Week 7: Generalization: Model Validation

[Powerpoint][jupyter][google colab][reveal]

Abstract: Generalization is the main objective of a machine learning algorithm. The models we design should work on data they have not seen before. Confirming whether a model generalizes well or not is the domain of model validation. In this lecture we introduce approaches to model validation such as hold out validation and cross validation.

Setup

notutils

This small package is a helper package for various notebook utilities used below.

The software can be installed using

%pip install notutilsfrom the command prompt where you can access your python installation.

The code is also available on GitHub: https://github.com/lawrennd/notutils

Once notutils is installed, it can be imported in the

usual manner.

import notutilspods

In Sheffield we created a suite of software tools for ‘Open Data Science’. Open data science is an approach to sharing code, models and data that should make it easier for companies, health professionals and scientists to gain access to data science techniques.

You can also check this blog post on Open Data Science.

The software can be installed using

%pip install podsfrom the command prompt where you can access your python installation.

The code is also available on GitHub: https://github.com/lawrennd/ods

Once pods is installed, it can be imported in the usual

manner.

import podsmlai

The mlai software is a suite of helper functions for

teaching and demonstrating machine learning algorithms. It was first

used in the Machine Learning and Adaptive Intelligence course in

Sheffield in 2013.

The software can be installed using

%pip install mlaifrom the command prompt where you can access your python installation.

The code is also available on GitHub: https://github.com/lawrennd/mlai

Once mlai is installed, it can be imported in the usual

manner.

import mlaiReview

- Last time: introduced basis functions.

- Showed how to maximize the likelihood of a non-linear model that’s linear in parameters.

- Explored the different characteristics of different basis function models

Alan Turing

|

|

Figure: Alan Turing, in 1946 he was only 11 minutes slower than the winner of the 1948 games. Would he have won a hypothetical games held in 1946? Source: Alan Turing Internet Scrapbook.

If we had to summarise the objectives of machine learning in one word, a very good candidate for that word would be generalization. What is generalization? From a human perspective it might be summarised as the ability to take lessons learned in one domain and apply them to another domain. If we accept the definition given in the first session for machine learning, \[ \text{data} + \text{model} \stackrel{\text{compute}}{\rightarrow} \text{prediction} \] then we see that without a model we can’t generalise: we only have data. Data is fine for answering very specific questions, like “Who won the Olympic Marathon in 2012?”, because we have that answer stored, however, we are not given the answer to many other questions. For example, Alan Turing was a formidable marathon runner, in 1946 he ran a time 2 hours 46 minutes (just under four minutes per kilometer, faster than I and most of the other Endcliffe Park Run runners can do 5 km). What is the probability he would have won an Olympics if one had been held in 1946?

To answer this question we need to generalize, but before we formalize the concept of generalization let’s introduce some formal representation of what it means to generalize in machine learning.

Expected Loss

Our objective function so far has been the negative log likelihood, which we have minimized (via the sum of squares error) to obtain our model. However, there is an alternative perspective on an objective function, that of a loss function. A loss function is a cost function associated with the penalty you might need to pay for a particular incorrect decision. One approach to machine learning involves specifying a loss function and considering how much a particular model is likely to cost us across its lifetime. We can represent this with an expectation. If our loss function is given as \(L(y, x, \mathbf{ w})\) for a particular model that predicts \(y\) given \(x\) and \(\mathbf{ w}\) then we are interested in minimizing the expected loss under the likely distribution of \(y\) and \(x\). To understand this formally we define the true distribution of the data samples, \(y\), \(x\). This is a particular distribution that we don’t typically have access to. To represent it we define a variant of the letter ‘P’, \(\mathbb{P}(y, x)\). If we genuinely pay \(L(y, x, \mathbf{ w})\) for every mistake we make, and the future test data is genuinely drawn from \(\mathbb{P}(y, x)\) then we can define our expected loss, or risk, to be, \[ R(\mathbf{ w}) = \int L(y, x, \mathbf{ w}) \mathbb{P}(y, x) \text{d}y \text{d}x. \] Of course, in practice, this value can’t be computed but it serves as a reminder of what it is we are aiming to minimize and under certain circumstances it can be approximated.

Sample-Based Approximations

A sample-based approximation to an expectation involves replacing the true expectation with a sum over samples from the distribution. \[ \int f(z) p(z) \text{d}z\approx \frac{1}{s}\sum_{i=1}^s f(z_i). \] if \(\{z_i\}_{i=1}^s\) are a set of \(s\) independent and identically distributed samples from the distribution \(p(z)\). This approximation becomes better for larger \(s\), although the rate of convergence to the true integral will be very dependent on the distribution \(p(z)\) and the function \(f(z)\).

That said, this means we can approximate our true integral with the sum, \[ R(\mathbf{ w}) \approx \frac{1}{n}\sum_{i=1}^{n} L(y_i, x_i, \mathbf{ w}). \]

if \(y_i\) and \(x_i\) are independent samples from the true distribution \(\mathbb{P}(y, x)\). Minimizing this sum directly is known as empirical risk minimization. The sum of squares error we have been using can be recovered for this case by considering a squared loss, \[ L(y, x, \mathbf{ w}) = (y-\mathbf{ w}^\top\boldsymbol{\phi}(x))^2, \] which gives an empirical risk of the form \[ R(\mathbf{ w}) \approx \frac{1}{n} \sum_{i=1}^{n} (y_i - \mathbf{ w}^\top \boldsymbol{\phi}(x_i))^2 \] which up to the constant \(\frac{1}{n}\) is identical to the objective function we have been using so far.

Estimating Risk through Validation

Unfortuantely, minimising the empirial risk only guarantees something about our performance on the training data. If we don’t have enough data for the approximation to the risk to be valid, then we can end up performing significantly worse on test data. Fortunately, we can also estimate the risk for test data through estimating the risk for unseen data. The main trick here is to ‘hold out’ a portion of our data from training and use the models performance on that sub-set of the data as a proxy for the true risk. This data is known as ‘validation’ data. It contrasts with test data, because its values are known at the model design time. However, in contrast to test date we don’t use it to fit our model. This means that it doesn’t exhibit the same bias that the empirical risk does when estimating the true risk.

Validation

In this lab we will explore techniques for model selection that make use of validation data. Data that isn’t seen by the model in the learning (or fitting) phase, but is used to validate our choice of model from amoungst the different designs we have selected.

In machine learning, we are looking to minimise the value of our objective function \(E\) with respect to its parameters \(\mathbf{ w}\). We do this by considering our training data. We minimize the value of the objective function as it’s observed at each training point. However we are really interested in how the model will perform on future data. For evaluating that we choose to hold out a portion of the data for evaluating the quality of the model.

We will review the different methods of model selection on the Olympics marathon data. Firstly we import the Olympic marathon data.

Olympic Marathon Data

|

|

The first thing we will do is load a standard data set for regression modelling. The data consists of the pace of Olympic Gold Medal Marathon winners for the Olympics from 1896 to present. Let’s load in the data and plot.

import numpy as np

import podsdata = pods.datasets.olympic_marathon_men()

x = data['X']

y = data['Y']

offset = y.mean()

scale = np.sqrt(y.var())

yhat = (y - offset)/scale

Figure: Olympic marathon pace times since 1896.

Things to notice about the data include the outlier in 1904, in that year the Olympics was in St Louis, USA. Organizational problems and challenges with dust kicked up by the cars following the race meant that participants got lost, and only very few participants completed. More recent years see more consistently quick marathons.

Validation on the Olympic Marathon Data

The first thing we’ll do is fit a standard linear model to the data. We recall from previous lectures and lab classes that to do this we need to solve the system \[ \boldsymbol{ \Phi}^\top \boldsymbol{ \Phi}\mathbf{ w}= \boldsymbol{ \Phi}^\top \mathbf{ y} \] for \(\mathbf{ w}\) and use the resulting vector to make predictions at the training points and test points, \[ \mathbf{ f}= \boldsymbol{ \Phi}\mathbf{ w}. \] The prediction function can be used to compute the objective function, \[ E(\mathbf{ w}) = \sum_{i}^{n} (y_i - \mathbf{ w}^\top\phi(\mathbf{ y}_i))^2 \] by substituting in the prediction in vector form we have \[ E(\mathbf{ w}) = (\mathbf{ y}- \mathbf{ f})^\top(\mathbf{ y}- \mathbf{ f}) \]

Exercise 1

In this question you will construct some flexible general code for fitting linear models.

Create a python function that computes \(\boldsymbol{ \Phi}\) for the linear basis,

\[\boldsymbol{ \Phi}= \begin{bmatrix}

\mathbf{ y}& \mathbf{1}\end{bmatrix}\] Name your function

linear. Phi should be in the form of a

design matrix and x should be in the form of a

numpy two dimensional array with \(n\) rows and 1 column Calls to your

function should be in the following form:

Phi = linear(x)

Create a python function that accepts, as arguments, a python

function that defines a basis (like the one you’ve just created called

linear) as well as a set of inputs and a vector of

parameters. Your new python function should return a prediction. Name

your function prediction. The return value f

should be a two dimensional numpy array with \(n\) rows and \(1\) column, where \(n\) is the number of data points. Calls to

your function should be in the following form:

f = prediction(w, x, linear)

Create a python function that computes the sum of squares objective

function (or error function). It should accept your input data (or

covariates) and target data (or response variables) and your parameter

vector w as arguments. It should also accept a python

function that represents the basis. Calls to your function should be in

the following form:

e = objective(w, x, y, linear)

Create a function that solves the linear system for the set of parameters that minimizes the sum of squares objective. It should accept input data, target data and a python function for the basis as the inputs. Calls to your function should be in the following form:

w = fit(x, y, linear)

Fit a linear model to the olympic data using these functions and plot the resulting prediction between 1890 and 2020. Set the title of the plot to be the error of the fit on the training data.

Polynomial Fit: Training Error

Exercise 2

In this question we extend the code above to a non- linear basis (a quadratic function).

Start by creating a python-function called quadratic. It

should compute the quadratic basis. \[

\boldsymbol{ \Phi}= \begin{bmatrix} \mathbf{1} & \mathbf{ y}&

\mathbf{ y}^2\end{bmatrix}

\] It should be called in the following form:

Phi = quadratic(x)

Use this to compute the quadratic fit for the model, again plotting the result titled by the error.

Polynomial Fits to Olympics Data

Figure: Polynomial fit to olympic data with 26 basis functions.

Hold Out Validation on Olympic Marathon Data

Figure: Olympic marathon data with validation error for extrapolation.

Extrapolation

Interpolation

Figure: Olympic marathon data with validation error for interpolation.

Choice of Validation Set

Hold Out Data

You have a conclusion as to which model fits best under the training error, but how do the two models perform in terms of validation? In this section we consider hold out validation. In hold out validation we remove a portion of the training data for validating the model on. The remaining data is used for fitting the model (training). Because this is a time series prediction, it makes sense for us to hold out data at the end of the time series. This means that we are validating on future predictions. We will hold out data from after 1980 and fit the model to the data before 1980.

# select indices of data to 'hold out'

indices_hold_out = np.flatnonzero(x>1980)

# Create a training set

x_train = np.delete(x, indices_hold_out, axis=0)

y_train = np.delete(y, indices_hold_out, axis=0)

# Create a hold out set

x_valid = np.take(x, indices_hold_out, axis=0)

y_valid = np.take(y, indices_hold_out, axis=0)Exercise 3

For both the linear and quadratic models, fit the model to the data up until 1980 and then compute the error on the held out data (from 1980 onwards). Which model performs better on the validation data?

Richer Basis Set

Now we have an approach for deciding which model to retain, we can consider the entire family of polynomial bases, with arbitrary degrees.

Exercise 4

Now we are going to build a more sophisticated form of basis function, one that can accept arguments to its inputs (similar to those we used in this lab). Here we will start with a polynomial basis.

def polynomial(x, degree, loc, scale):

degrees =np.arange(degree+1)

return ((x-loc)/scale)**degreesThe basis as we’ve defined it has three arguments as well as the

input. The degree of the polynomial, the scale of the polynomial and the

offset. These arguments need to be passed to the basis functions

whenever they are called. Modify your code to pass these additional

arguments to the python function for creating the basis. Do this for

each of your functions predict, fit and

objective. You will find *args (or

**kwargs) useful.

Write code that tries to fit different models to the data with

polynomial basis. Use a maximum degree for your basis from 0 to 17. For

each polynomial store the hold out validation error and the

training error. When you have finished the computation plot the

hold out error for your models and the training error for your p. When

computing your polynomial basis use offset=1956. and

scale=120. to ensure that the data is mapped (roughly) to

the -1, 1 range.

Which polynomial has the minimum training error? Which polynomial has the minimum validation error?

Leave One Out Validation

Hold out validation uses a portion of the data to hold out and a portion of the data to train on. There is always a compromise between how much data to hold out and how much data to train on. The more data you hold out, the better the estimate of your performance at ‘run-time’ (when the model is used to make predictions in real applications). However, by holding out more data, you leave less data to train on, so you have a better validation, but a poorer quality model fit than you could have had if you’d used all the data for training. Leave one out cross validation leaves as much data in the training phase as possible: you only take one point out for your validation set. However, if you do this for hold-out validation, then the quality of your validation error is very poor because you are testing the model quality on one point only. In cross validation the approach is to improve this estimate by doing more than one model fit. In leave one out cross validation you fit \(n\) different models, where \(n\) is the number of your data. For each model fit you take out one data point, and train the model on the remaining \(n-1\) data points. You validate the model on the data point you’ve held out, but you do this \(n\) times, once for each different model. You then take the average of all the \(n\) badly estimated hold out validation errors. The average of this estimate is a good estimate of performance of those models on the test data.

Exercise 5

Write code that computes the leave one out validation error

for the olympic data and the polynomial basis. Use the functions you

have created above: objective, fit,

polynomial. Compute the leave-one-out cross

validation error for basis functions containing a maximum degree from 0

to 17.

\(k\)-fold Cross Validation

Leave one out cross validation produces a very good estimate of the performance at test time, and is particularly useful if you don’t have a lot of data. In these cases you need to make as much use of your data for model fitting as possible, and having a large hold out data set (to validate model performance) can have a significant effect on the size of the data set you have to fit your model, and correspondingly, the complexity of the model you can fit. However, leave one out cross validation involves fitting \(n\) models, where \(n\) is your number of training data. For the olympics example, this is only 27 model fits, but in practice many data sets consist thousands or millions of data points, and fitting many millions of models for estimating validation error isn’t really practical. One option is to return to hold out validation, but another approach is to perform \(k\)-fold cross validation. In \(k\)-fold cross validation you split your data into \(k\) parts. Then you use \(k-1\) of those parts for training, and hold out one part for validation. Just like we did for the hold out validation above. In cross validation, however, you repeat this process. You swap the part of the data you just used for validation back in to the training set and select another part for validation. You then fit the model to the new training data and validate on the portion of data you’ve just extracted. Each split of training/validation data is called a fold and since you do this process \(k\) times, the procedure is known as \(k\)-fold cross validation. The term cross refers to the fact that you cross over your validation portion back into the training data every time you perform a fold.

Exercise 6

Perform \(k\)-fold cross validation on the olympic data with your polynomial basis. Use \(k\) set to 5 (e.g. five fold cross validation). Do the different forms of validation select different models? Does five fold cross validation always select the same model?

Note: The data doesn’t divide into 5 equal size partitions

for the five fold cross validation error. Don’t worry about this too

much. Two of the partitions will have an extra data point. You might

find np.random.permutation? useful.

Bias Variance Decomposition

One of Breiman’s ideas for improving predictive performance is known as bagging (Breiman:bagging96?). The idea is to train a number of models on the data such that they overfit (high variance). Then average the predictions of these models. The models are trained on different bootstrap samples (Efron, 1979) and their predictions are aggregated giving us the acronym, Bagging. By combining decision trees with bagging, we recover random forests (Breiman, 2001).

Bias and variance can also be estimated through Efron’s bootstrap (Efron, 1979), and the traditional view has been that there’s a form of Goldilocks effect, where the best predictions are given by the model that is ‘just right’ for the amount of data available. Not to simple, not too complex. The idea is that bias decreases with increasing model complexity and variance increases with increasing model complexity. Typically plots begin with the Mummy bear on the left (too much bias) end with the Daddy bear on the right (too much variance) and show a dip in the middle where the Baby bear (just) right finds themselves.

The Daddy bear is typically positioned at the point where the model can exactly interpolate the data. For a generalized linear model (McCullagh and Nelder, 1989), this is the point at which the number of parameters is equal to the number of data1.

The bias-variance decomposition (Geman:biasvariance92?) considers the expected test error for different variations of the training data sampled from, \(\mathbb{P}(\mathbf{ x}, y)\) \[\begin{align*} R(\mathbf{ w}) = & \int \left(y- f^*(\mathbf{ x})\right)^2 \mathbb{P}(y, \mathbf{ x}) \text{d}y\text{d}\mathbf{ x}\\ & \triangleq \mathbb{E}\left[ \left(y- f^*(\mathbf{ x})\right)^2 \right]. \end{align*}\]

This can be decomposed into two parts, \[ \begin{align*} \mathbb{E}\left[ \left(y- f(\mathbf{ x})\right)^2 \right] = & \text{bias}\left[f^*(\mathbf{ x})\right]^2 + \text{variance}\left[f^*(\mathbf{ x})\right] +\sigma^2, \end{align*} \] where the bias is given by \[ \text{bias}\left[f^*(\mathbf{ x})\right] = \mathbb{E}\left[f^*(\mathbf{ x})\right] - f(\mathbf{ x}) \] and it summarizes error that arises from the model’s inability to represent the underlying complexity of the data. For example, if we were to model the marathon pace of the winning runner from the Olympics by computing the average pace across time, then that model would exhibit bias error because the reality of Olympic marathon pace is it is changing (typically getting faster).

The variance term is given by \[ \text{variance}\left[f^*(\mathbf{ x})\right] = \mathbb{E}\left[\left(f^*(\mathbf{ x}) - \mathbb{E}\left[f^*(\mathbf{ x})\right]\right)^2\right]. \] The variance term is often described as arising from a model that is too complex, but we must be careful with this idea. Is the model really too complex relative to the real world that generates the data? The real world is a complex place, and it is rare that we are constructing mathematical models that are more complex than the world around us. Rather, the ‘too complex’ refers to ability to estimate the parameters of the model given the data we have. Slight variations in the training set cause changes in prediction.

Models that exhibit high variance are sometimes said to ‘overfit’ the data whereas models that exhibit high bias are sometimes described as ‘underfitting’ the data.

Bias vs Variance Error Plots

Helper function for sampling data from two different classes.

import numpy as npHelper function for plotting the decision boundary of the SVM.

import urllib.requesturllib.request.urlretrieve('https://raw.githubusercontent.com/lawrennd/talks/gh-pages/mlai.py','mlai.py')import matplotlib

font = {'family' : 'sans',

'weight' : 'bold',

'size' : 22}

matplotlib.rc('font', **font)

import matplotlib.pyplot as pltfrom sklearn import svm# Create an instance of SVM and fit the data.

C = 100.0 # SVM regularization parameter

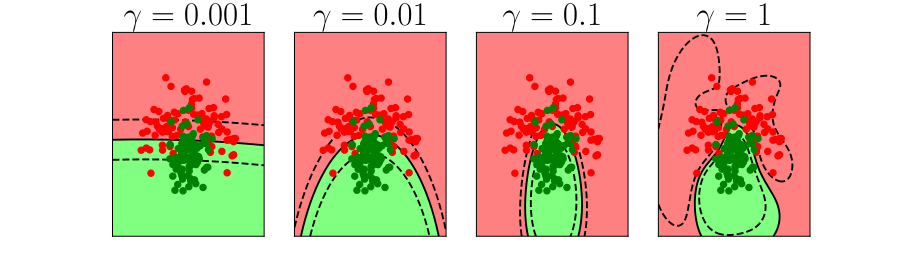

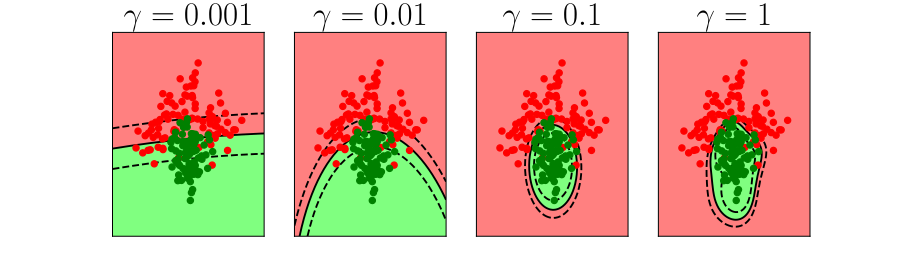

gammas = [0.001, 0.01, 0.1, 1]

per_class=30

num_samps = 20

# Set-up 2x2 grid for plotting.

fig, ax = plt.subplots(1, 4, figsize=(10,3))

xlim=None

ylim=None

for samp in range(num_samps):

X, y=create_data(per_class)

models = []

titles = []

for gamma in gammas:

models.append(svm.SVC(kernel='rbf', gamma=gamma, C=C))

titles.append('$\gamma={}$'.format(gamma))

models = (cl.fit(X, y) for cl in models)

xlim, ylim = decision_boundary_plot(models, X, y,

axs=ax,

filename='bias-variance{samp:0>3}.svg'.format(samp=samp),

directory='./ml'

titles=titles,

xlim=xlim,

ylim=ylim)

Figure: In each figure the simpler model is on the left, and the more complex model is on the right. Each fit is done to a different version of the data set. The simpler model is more consistent in its errors (bias error), whereas the more complex model is varying in its errors (variance error).

Further Reading

- Section 1.5 of Rogers and Girolami (2011)

Thanks!

For more information on these subjects and more you might want to check the following resources.

- twitter: @lawrennd

- podcast: The Talking Machines

- newspaper: Guardian Profile Page

- blog: http://inverseprobability.com