Week 4: AI and Data Science


Abstract:

In the first lecture, we laid out the underpinning phenomena that give us the landscape of data science. In this lecture we unpick the challenges that landscape presents us with. The material gives you context for why data science is very different from standard software engineering, and how data science problems need to be approached, including the many different aspects that need to be considered. We will look at the challenges of deploying data science solutions in practice, which we categorize into three groups.

Introduction

Data science is an emerging discipline. That makes it harder to make clean decisions about what any given individual will need to know to become a data scientist. Those of you who are studying now will be those that define the discipline. As we deploy more data driven decision making in the world, the role will be refined. Until we achieve that refinement, your knowledge needs to be broad based.

In this lecture we will first continue our theme of how our limitations as humans mean that our analysis of data can be affected, and I will introduce an analogy that should help you understand how data science differs significantly from traditional software engineering.

notutils

This small package is a helper package for various notebook utilities used below.

The software can be installed using

    %pip install notutils

from the command prompt where you can access your python installation.

The code is also available on GitHub: https://github.com/lawrennd/notutils

Once notutils is installed, it can be imported in the usual manner.

    import notutils

mlai

The mlai software is a suite of helper functions for teaching and demonstrating machine learning algorithms. It was first used in the Machine Learning and Adaptive Intelligence course in Sheffield in 2013.

The software can be installed using

    %pip install mlai

from the command prompt where you can access your python installation.

The code is also available on GitHub: https://github.com/lawrennd/mlai

Once mlai is installed, it can be imported in the usual manner.

    import mlai
    from mlai import plot

pods

In Sheffield we created a suite of software tools for ‘Open Data Science’. Open data science is an approach to sharing code, models and data that should make it easier for companies, health professionals and scientists to gain access to data science techniques.

You can also check this blog post on Open Data Science.

The software can be installed using

    %pip install pods

from the command prompt where you can access your python installation.

The code is also available on GitHub: https://github.com/lawrennd/ods

Once pods is installed, it can be imported in the usual manner.

    import pods

Statistics to Deep Learning

What is Machine Learning?

Machine learning allows us to extract knowledge from data to form a prediction.

\[\text{data} + \text{model} \stackrel{\text{compute}}{\rightarrow} \text{prediction}\]

A machine learning prediction is made by combining a model with data to form the prediction. The manner in which this is done gives us the machine learning algorithm.

Machine learning models are mathematical models which make weak assumptions about data, e.g. smoothness assumptions. By combining these assumptions with the data we observe, we can interpolate between data points or, occasionally, extrapolate into the future.

Machine learning is a technology which strongly overlaps with the methodology of statistics. From a historical/philosophical view point, machine learning differs from statistics in that the focus in the machine learning community has been primarily on accuracy of prediction, whereas the focus in statistics is typically on the interpretability of a model and/or validating a hypothesis through data collection.

The rapid increase in the availability of compute and data has led to the increased prominence of machine learning. This prominence is surfacing in two different but overlapping domains: data science and artificial intelligence.

From Model to Decision

The real challenge, however, is end-to-end decision making. Taking information from the environment and using it to drive decision making to achieve goals.

Classical Statistical Analysis

Despite the shift of emphasis, traditional statistical techniques are more important than ever. One of the few ways we have to validate the analyses we create is to make use of visualizations, randomized testing and other forms of statistical analysis. You will have explored some of these ideas in earlier courses in machine learning. In this unit we provide some review material in a practical sheet to bring some of those ideas together in the context of data science.

What is Machine Learning?

What is machine learning? At its most basic level machine learning is a combination of

\[\text{data} + \text{model} \stackrel{\text{compute}}{\rightarrow} \text{prediction}\]

where data is our observations. They can be actively or passively acquired (meta-data). The model contains our assumptions, based on previous experience. That experience can be other data, it can come from transfer learning, or it can merely be our beliefs about the regularities of the universe. In humans our models include our inductive biases. The prediction is an action to be taken or a categorization or a quality score. The reason that machine learning has become a mainstay of artificial intelligence is the importance of predictions in artificial intelligence. The data and the model are combined through computation.

In practice we normally perform machine learning using two functions. To combine data with a model we typically make use of:

a prediction function: it is used to make the predictions. It includes our beliefs about the regularities of the universe, our assumptions about how the world works, e.g., smoothness, spatial similarities, temporal similarities.

an objective function: it defines the ‘cost’ of misprediction. Typically, it includes knowledge about the world’s generating processes (probabilistic objectives) or the costs we pay for mispredictions (empirical risk minimization).

The combination of data and model through the prediction function and the objective function leads to a learning algorithm. The class of prediction functions and objective functions we can make use of is restricted by the algorithms they lead to. If the prediction function or the objective function are too complex, then it can be difficult to find an appropriate learning algorithm. Much of the academic field of machine learning is the quest for new learning algorithms that allow us to bring different types of models and data together.

A useful reference for state of the art in machine learning is the UK Royal Society Report, Machine Learning: Power and Promise of Computers that Learn by Example.

You can also check my blog post on What is Machine Learning?.

Artificial Intelligence and Data Science

Machine learning technologies have been the driver of two related, but distinct disciplines. The first is data science. Data science is an emerging field that arises from the fact that we now collect so much data by happenstance, rather than by experimental design. Classical statistics is the science of drawing conclusions from data, and to do so statistical experiments are carefully designed. In the modern era we collect so much data that there’s a desire to draw inferences directly from the data.

As well as machine learning, the field of data science draws from statistics, cloud computing, data storage (e.g. streaming data), visualization and data mining.

In contrast, artificial intelligence technologies typically focus on emulating some form of human behaviour, such as understanding an image, or some speech, or translating text from one form to another. The recent advances in artificial intelligence have come from machine learning providing the automation. But in contrast to data science, in artificial intelligence the data is normally collected with the specific task in mind. In this sense it has strong relations to classical statistics.

Classically artificial intelligence worried more about logic and planning and focused less on data driven decision making. Modern machine learning owes more to the field of Cybernetics (Wiener, 1948) than artificial intelligence. Related fields include robotics, speech recognition, language understanding and computer vision.

There are strong overlaps between the fields: the wide availability of data by happenstance makes it easier to collect data for designing AI systems. These relations are coming through wide availability of sensing technologies that are interconnected by cellular networks, WiFi and the internet. This phenomenon is sometimes known as the Internet of Things, but this feels like a dangerous misnomer. We must never forget that we are interconnecting people, not things.

What does Machine Learning do?

Any process of automation allows us to scale what we do by codifying a process in some way that makes it efficient and repeatable. Machine learning automates by emulating human (or other) actions found in data. Machine learning codifies in the form of a mathematical function that is learnt by a computer. If we can create these mathematical functions in ways in which they can interconnect, then we can also build systems.

Machine learning works through codifying a prediction of interest into a mathematical function. For example, we can try and predict the probability that a customer wants to buy a jumper given knowledge of their age, and the latitude where they live. The technique known as logistic regression estimates the log-odds that someone will buy a jumper as a linear weighted sum of the features of interest.

\[ \text{odds} = \frac{p(\text{bought})}{p(\text{not bought})} \]

\[ \log \text{odds} = w_0 + w_1 \text{age} + w_2 \text{latitude}.\] Here \(w_0\), \(w_1\) and \(w_2\) are the parameters of the model. If \(w_1\) and \(w_2\) are both positive, then the log-odds that someone will buy a jumper increase with increasing latitude and age, so the further north you are and the older you are the more likely you are to buy a jumper. The parameter \(w_0\) is an offset parameter and gives the log-odds of buying a jumper at zero age and on the equator. It is likely to be negative, indicating that the purchase is odds-against. This is also a classical statistical model, and models like logistic regression are widely used to estimate probabilities from ad-click prediction to disease risk.

This is called a generalized linear model. We can also think of it as estimating the probability of a purchase as a nonlinear function of the features (age, latitude) and the parameters (the \(w\) values). The function is known as the sigmoid or logistic function, thus the name logistic regression.

Sigmoid Function

Figure: The logistic function.

The function has this characteristic ‘s’-shape (which is where the term sigmoid, as in sigma, comes from). It also takes the input from the entire real line and ‘squashes’ it into an output that is between zero and one. For this reason it is sometimes also called a ‘squashing function’.

The sigmoid comes from inverting the logarithm of the odds ratio, \[ \frac{\pi}{(1-\pi)} \] where \(\pi\) is the probability of a positive outcome and \(1-\pi\) is the probability of a negative outcome, giving

\[ p(\text{bought}) = \sigma\left(w_0 + w_1 \text{age} + w_2 \text{latitude}\right).\]
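As a minimal sketch of the model above, the following computes the purchase probability by passing the log-odds through the sigmoid. The parameter values are made up purely for illustration (they are not fitted to any data), and the names `sigmoid` and `p_bought` are hypothetical.

```python
import numpy as np

def sigmoid(f):
    """Logistic function: maps any real number into the interval (0, 1)."""
    return 1.0 / (1.0 + np.exp(-f))

# Hypothetical parameter values, chosen only for illustration.
w0, w1, w2 = -4.0, 0.03, 0.05

def p_bought(age, latitude):
    """Probability of buying a jumper under the illustrative parameters."""
    log_odds = w0 + w1 * age + w2 * latitude
    return sigmoid(log_odds)

p_young_south = p_bought(age=20, latitude=10)
p_old_north = p_bought(age=70, latitude=60)
print(p_young_south, p_old_north)
```

With positive \(w_1\) and \(w_2\), the older, more northerly customer gets the higher probability, and the negative offset keeps the purchase odds-against for young customers near the equator.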

In the case where we have features to help us predict, we sometimes denote such features as a vector, \(\mathbf{ x}\), and we then use an inner product between the features and the parameters, \(\mathbf{ w}^\top \mathbf{ x}= w_1 x_1 + w_2 x_2 + w_3 x_3 ...\), to represent the argument of the sigmoid.

\[ p(\text{bought}) = \sigma\left(\mathbf{ w}^\top \mathbf{ x}\right).\] More generally, we aim to predict some aspect of our data, \(y\), by relating it through a mathematical function, \(f(\cdot)\), to the parameters, \(\mathbf{ w}\) and the data, \(\mathbf{ x}\).

\[ y= f\left(\mathbf{ x}, \mathbf{ w}\right).\] We call \(f(\cdot)\) the prediction function.

To obtain the fit to data, we use a separate function called the objective function that gives us a mathematical representation of the difference between our predictions and the real data.

\[E(\mathbf{ w}, \mathbf{Y}, \mathbf{X})\] A commonly used example (for instance in a regression problem) is least squares, \[E(\mathbf{ w}, \mathbf{Y}, \mathbf{X}) = \sum_{i=1}^n\left(y_i - f(\mathbf{ x}_i, \mathbf{ w})\right)^2.\]
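To make the least squares objective concrete, here is a small sketch that evaluates it for a linear prediction function on toy data. The data and the two candidate parameter vectors are invented for illustration, and the function names are hypothetical.

```python
import numpy as np

def f(x, w):
    """A linear prediction function with offset w[0] and slope w[1]."""
    return w[0] + w[1] * x

def least_squares(w, y, x):
    """Sum of squared differences between observations and predictions."""
    return np.sum((y - f(x, w)) ** 2)

# Toy data generated exactly from y = 1 + 2x (no noise).
x = np.array([0.0, 1.0, 2.0, 3.0])
y = 1.0 + 2.0 * x

E_true = least_squares(np.array([1.0, 2.0]), y, x)   # the generating parameters
E_wrong = least_squares(np.array([0.0, 0.0]), y, x)  # a poor guess
print(E_true, E_wrong)
```

The objective is zero at the parameters that generated the data and grows as the predictions move away from the observations, which is what a learning algorithm exploits when it searches for good parameters.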

If a linear prediction function is combined with the least squares objective function, then that gives us a classical linear regression, another classical statistical model. Statistics often focusses on linear models because it makes interpretation of the model easier. Interpretation is key in statistics because the aim is normally to validate questions by analysis of data. Machine learning has typically focused more on the prediction function itself and worried less about the interpretation of parameters. In statistics, where interpretation is typically more important than prediction, parameters are normally denoted by \(\boldsymbol{\beta}\) instead of \(\mathbf{ w}\).

A key difference between statistics and machine learning is that (traditionally) machine learning has focussed on predictive capability and statistics has focussed on interpretability. That means that in a statistics class far more emphasis will be placed on interpretation of the parameters. In machine learning, the parameters, \(\mathbf{ w}\), are just a means to an end. But in statistics, when we denote the parameters by \(\boldsymbol{\beta}\), we often use the parameters to tell us something about the phenomenon under study (for example, a disease).

So we move between \[ p(\text{bought}) = \sigma\left(w_0 + w_1 \text{age} + w_2 \text{latitude}\right),\]

which denotes that the emphasis is on predictive power, and

\[ p(\text{bought}) = \sigma\left(\beta_0 + \beta_1 \text{age} + \beta_2 \text{latitude}\right),\]

which denotes that the emphasis is on interpretation of the parameters.

Another effect of the focus on prediction in machine learning is that non-linear approaches, which can be harder to interpret, are more widely deployed in machine learning; they tend to improve quality of predictions at the expense of interpretability.

Logistic Regression

A logistic regression is an approach to classification which extends the linear basis function models we’ve already explored. Rather than modeling the output of the function directly the assumption is that we model the log-odds with the basis functions.

The odds are defined as the ratio of the probability of a positive outcome, to the probability of a negative outcome. If the probability of a positive outcome is denoted by \(\pi\), then the odds are computed as \(\frac{\pi}{1-\pi}\). Odds are widely used by bookmakers in gambling, although a bookmaker’s odds won’t normalise: i.e. if you look at the equivalent probabilities, and sum over the probability of all outcomes the bookmakers are considering, then you won’t get one. This is how the bookmaker makes a profit. Because a probability is always between zero and one, the odds are always between \(0\) and \(\infty\). If the positive outcome is unlikely the odds are close to zero; if it is very likely then the odds grow towards infinity. Taking the logarithm of the odds maps the odds from the positive half space to being across the entire real line. Odds that were between 0 and 1 (where the negative outcome was more likely) are mapped to the range between \(-\infty\) and \(0\). Odds that are greater than 1 are mapped to the range between \(0\) and \(\infty\). Considering the log odds therefore takes a number between 0 and 1 (the probability of positive outcome) and maps it to the entire real line. The function that does this is known as the logit function, \(g^{-1}(p_i) = \log\frac{p_i}{1-p_i}\). This function is known as a link function.
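The mappings described above can be checked numerically. The sketch below (with hypothetical function names) converts a few probabilities to odds and log-odds, then maps the log-odds back with the logistic function to recover the original probabilities.

```python
import numpy as np

def odds(p):
    """Ratio of the probability of a positive outcome to that of a negative one."""
    return p / (1.0 - p)

def logit(p):
    """Log-odds: maps probabilities in (0, 1) onto the whole real line."""
    return np.log(odds(p))

def inverse_logit(f):
    """Logistic function: maps log-odds back to probabilities."""
    return 1.0 / (1.0 + np.exp(-f))

p = np.array([0.1, 0.5, 0.9])
print(odds(p))                  # values below, at and above 1
print(logit(p))                 # negative, zero and positive
print(inverse_logit(logit(p)))  # recovers the probabilities
```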

For a standard regression we take, \[ f(\mathbf{ x}) = \mathbf{ w}^\top \boldsymbol{ \phi}(\mathbf{ x}), \] if we want to perform classification we perform a logistic regression. \[ \log \frac{\pi}{(1-\pi)} = \mathbf{ w}^\top \boldsymbol{ \phi}(\mathbf{ x}) \] where the odds ratio between the positive class and the negative class is given by \[ \frac{\pi}{(1-\pi)} \] The odds can never be negative, but can take any value from 0 to \(\infty\). We have defined the link function as taking the form \(g^{-1}(\cdot)\) implying that the inverse link function is given by \(g(\cdot)\). Since we have defined, \[ g^{-1}(\pi) = \mathbf{ w}^\top \boldsymbol{ \phi}(\mathbf{ x}) \] we can write \(\pi\) in terms of the inverse link function, \(g(\cdot)\) as \[ \pi = g(\mathbf{ w}^\top \boldsymbol{ \phi}(\mathbf{ x})). \]

Basis Function

We’ll define our prediction, objective and gradient functions below. But before we start, we need to define a basis function for our model. Let’s start with the linear basis.

    import numpy as np
    import mlai
    from mlai import linear

Prediction Function

Now we have the basis function let’s define the prediction function.

    import numpy as np

    def predict(w, x, basis=linear, **kwargs):
        "Generates the prediction function and the basis matrix."
        # Map the inputs through the basis functions to form the design matrix.
        Phi = basis(x, **kwargs)
        # A linear combination of the basis functions gives the log-odds.
        f = np.dot(Phi, w)
        # Squash the log-odds through the logistic function to obtain probabilities.
        return 1./(1+np.exp(-f)), Phi

This inverse of the link function is known as the logistic (thus the name logistic regression) or sometimes it is called the sigmoid function. For a particular value of the input to the inverse link function, \(f_i = \mathbf{ w}^\top \boldsymbol{ \phi}(\mathbf{ x}_i)\) we can plot the value of the inverse link function as below.

By replacing the inverse link with the sigmoid we can write \(\pi\) as a function of the input and the parameter vector as, \[ \pi(\mathbf{ x},\mathbf{ w}) = \frac{1}{1+\exp\left(-\mathbf{ w}^\top \boldsymbol{ \phi}(\mathbf{ x})\right)}. \] The process for logistic regression is as follows. Compute the output of a standard linear basis function composition (\(\mathbf{ w}^\top \boldsymbol{ \phi}(\mathbf{ x})\), as we did for linear regression) and then apply the inverse link function, \(g(\mathbf{ w}^\top \boldsymbol{ \phi}(\mathbf{ x}))\). In logistic regression this involves squashing it with the logistic (or sigmoid) function. Use this value, which now has an interpretation as a probability in a Bernoulli distribution, to form the likelihood. Then we can assume conditional independence of each data point given the parameters and develop a likelihood for the entire data set.

As we discussed last time, the Bernoulli likelihood is of the form, \[ P(y_i|\mathbf{ w}, \mathbf{ x}) = \pi_i^{y_i} (1-\pi_i)^{1-y_i} \] which we can think of as a clever trick for mathematically switching between two probabilities. If we were to write it as code, it would be better described as

    def bernoulli(x, y, pi):
        # pi is a function returning the probability of a positive outcome at x.
        if y == 1:
            return pi(x)
        else:
            return 1-pi(x)

but writing it mathematically makes it easier to write our objective function within a single mathematical equation.
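The equivalence of the two forms can be checked directly. In this sketch the probability is passed as a plain number rather than as a function of the input, which is a simplification made here for illustration; the function names are hypothetical.

```python
def bernoulli_product(y, pi):
    """Bernoulli likelihood written as a single expression."""
    return pi ** y * (1 - pi) ** (1 - y)

def bernoulli_branch(y, pi):
    """The same likelihood written with an explicit switch on the label."""
    if y == 1:
        return pi
    else:
        return 1 - pi

pi = 0.8
for y in (0, 1):
    # Both forms give the same value for each label.
    print(y, bernoulli_product(y, pi), bernoulli_branch(y, pi))
```

The exponents \(y_i\) and \(1-y_i\) act as switches: whichever is zero turns its factor into one, leaving only the probability of the observed label.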

Maximum Likelihood

To obtain the parameters of the model, we need to maximize the likelihood, or minimize the objective function, normally taken to be the negative log likelihood. With a data conditional independence assumption the likelihood has the form, \[ P(\mathbf{ y}|\mathbf{ w}, \mathbf{X}) = \prod_{i=1}^nP(y_i|\mathbf{ w}, \mathbf{ x}_i). \] which can be written as a log likelihood in the form \[ \log P(\mathbf{ y}|\mathbf{ w}, \mathbf{X}) = \sum_{i=1}^n\log P(y_i|\mathbf{ w}, \mathbf{ x}_i) = \sum_{i=1}^n y_i \log \pi_i + \sum_{i=1}^n(1-y_i)\log (1-\pi_i) \] and if we take the probability of positive outcome for the \(i\)th data point to be given by \[ \pi_i = g\left(\mathbf{ w}^\top \boldsymbol{ \phi}(\mathbf{ x}_i)\right), \] where \(g(\cdot)\) is the inverse link function, then this leads to an objective function of the form, \[ E(\mathbf{ w}) = - \sum_{i=1}^ny_i \log g\left(\mathbf{ w}^\top \boldsymbol{ \phi}(\mathbf{ x}_i)\right) - \sum_{i=1}^n(1-y_i)\log \left(1-g\left(\mathbf{ w}^\top \boldsymbol{ \phi}(\mathbf{ x}_i)\right)\right). \]

    import numpy as np

    def objective(g, y):
        "Computes the objective function."
        labs = np.asarray(y, dtype=float).flatten()
        # Indices of the positive and negative examples.
        posind = np.where(labs==1)
        negind = np.where(labs==0)
        # Negative log likelihood: log(g) for positives, log(1-g) for negatives.
        return -np.log(g[posind, :]).sum() - np.log(1-g[negind, :]).sum()

As normal, we would like to minimize this objective. This can be done by differentiating with respect to the parameters of our prediction function, \(\pi(\mathbf{ x};\mathbf{ w})\), for optimisation. The gradient of the objective with respect to the parameters \(\mathbf{ w}\) is of the form, \[ \frac{\text{d}E(\mathbf{ w})}{\text{d}\mathbf{ w}} = -\sum_{i=1}^n \frac{y_i}{g\left(\mathbf{ w}^\top \boldsymbol{ \phi}(\mathbf{ x}_i)\right)}\frac{\text{d}g(f_i)}{\text{d}f_i} \boldsymbol{ \phi}(\mathbf{ x}_i) + \sum_{i=1}^n \frac{1-y_i}{1-g\left(\mathbf{ w}^\top \boldsymbol{ \phi}(\mathbf{ x}_i)\right)}\frac{\text{d}g(f_i)}{\text{d}f_i} \boldsymbol{ \phi}(\mathbf{ x}_i) \] where we used the chain rule to develop the derivative in terms of \(\frac{\text{d}g(f_i)}{\text{d}f_i}\), which is the gradient of the inverse link function (in our case the gradient of the sigmoid function).

So the gradient of the objective function depends on the gradient of the inverse link function, on the gradient of the log likelihood with respect to the output of the inverse link function, and naturally on the gradient of the argument of the inverse link function with respect to the parameters, which is simply \(\boldsymbol{ \phi}(\mathbf{ x}_i)\).

The only missing term is the gradient of the inverse link function. For the sigmoid squashing function we have, \[\begin{align*} g(f_i) &= \frac{1}{1+\exp(-f_i)}\\ &=(1+\exp(-f_i))^{-1} \end{align*}\] and the gradient can be computed as \[\begin{align*} \frac{\text{d}g(f_i)}{\text{d} f_i} & = \exp(-f_i)(1+\exp(-f_i))^{-2}\\ & = \frac{1}{1+\exp(-f_i)} \frac{\exp(-f_i)}{1+\exp(-f_i)} \\ & = g(f_i) (1-g(f_i)) \end{align*}\] so the full gradient can be written down as \[ \frac{\text{d}E(\mathbf{ w})}{\text{d}\mathbf{ w}} = -\sum_{i=1}^n y_i\left(1-g\left(\mathbf{ w}^\top \boldsymbol{ \phi}(\mathbf{ x}_i)\right)\right) \boldsymbol{ \phi}(\mathbf{ x}_i) + \sum_{i=1}^n (1-y_i)\left(g\left(\mathbf{ w}^\top \boldsymbol{ \phi}(\mathbf{ x}_i)\right)\right) \boldsymbol{ \phi}(\mathbf{ x}_i). \]
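The identity \(\frac{\text{d}g(f_i)}{\text{d}f_i} = g(f_i)(1-g(f_i))\) can be sanity-checked against a finite-difference approximation. This is only a numerical sketch with hypothetical function names and an arbitrarily chosen step size; it does not replace the derivation.

```python
import numpy as np

def g(f):
    """Sigmoid (inverse link) function."""
    return 1.0 / (1.0 + np.exp(-f))

def dg_analytic(f):
    """Gradient of the sigmoid from the derivation: g(f) (1 - g(f))."""
    return g(f) * (1.0 - g(f))

def dg_numeric(f, eps=1e-6):
    """Central finite-difference approximation to the gradient."""
    return (g(f + eps) - g(f - eps)) / (2.0 * eps)

f = np.linspace(-5.0, 5.0, 11)
max_diff = np.max(np.abs(dg_analytic(f) - dg_numeric(f)))
print(max_diff)  # should be very small if the derivation is right
```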

    import numpy as np

    def gradient(g, Phi, y):
        "Computes the gradient of the objective with respect to the parameter vector."
        labs = np.asarray(y, dtype=float).flatten()
        # Contribution of the positive examples: -(1 - g) * phi.
        posind = np.where(labs==1)
        dw = -(Phi[posind]*(1-g[posind])).sum(0)
        # Contribution of the negative examples: g * phi.
        negind = np.where(labs==0)
        dw += (Phi[negind]*g[negind]).sum(0)
        return dw[:, None]

Optimization of the Function

Reorganizing the gradient to find a stationary point of the function with respect to the parameters \(\mathbf{ w}\) in closed form turns out to be impossible. Optimization has to proceed by numerical methods. Options include the multidimensional variant of Newton’s method or gradient based optimization methods like those we used for optimizing matrix factorization for the movie recommender system. We recall from matrix factorization that, for large data, stochastic gradient descent or the Robbins-Monro (Robbins and Monro, 1951) optimization procedure worked best for function minimization.
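As a rough illustration of numerical optimization, the sketch below fits a logistic regression by plain batch gradient descent on synthetic data. It is self-contained rather than built on the `predict`, `objective` and `gradient` functions above, it uses full-batch rather than stochastic gradients for simplicity, and the data, step size and iteration count are all assumptions made for the example.

```python
import numpy as np

rng = np.random.default_rng(0)

# Synthetic one-dimensional data: class 1 tends to have larger x.
n = 200
x = np.concatenate([rng.normal(-1.0, 1.0, n // 2), rng.normal(1.0, 1.0, n // 2)])
y = np.concatenate([np.zeros(n // 2), np.ones(n // 2)])
Phi = np.column_stack([np.ones(n), x])  # linear basis: offset and input

def sigmoid(f):
    return 1.0 / (1.0 + np.exp(-f))

def objective(w):
    """Negative log likelihood of the Bernoulli model."""
    g = sigmoid(Phi @ w)
    return -np.sum(y * np.log(g) + (1 - y) * np.log(1 - g))

def gradient(w):
    """Gradient of the objective; the two sums in the text combine to (g - y) phi."""
    g = sigmoid(Phi @ w)
    return Phi.T @ (g - y)

w = np.zeros(2)
learn_rate = 0.005  # hypothetical step size, not tuned
E_start = objective(w)
for _ in range(1000):
    w = w - learn_rate * gradient(w)  # step downhill along the gradient
E_end = objective(w)
print(E_start, E_end, w)
```

A stochastic variant would replace the full sum in `gradient` with the contribution from one data point (or a small batch) per step, typically with a decaying learning rate.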

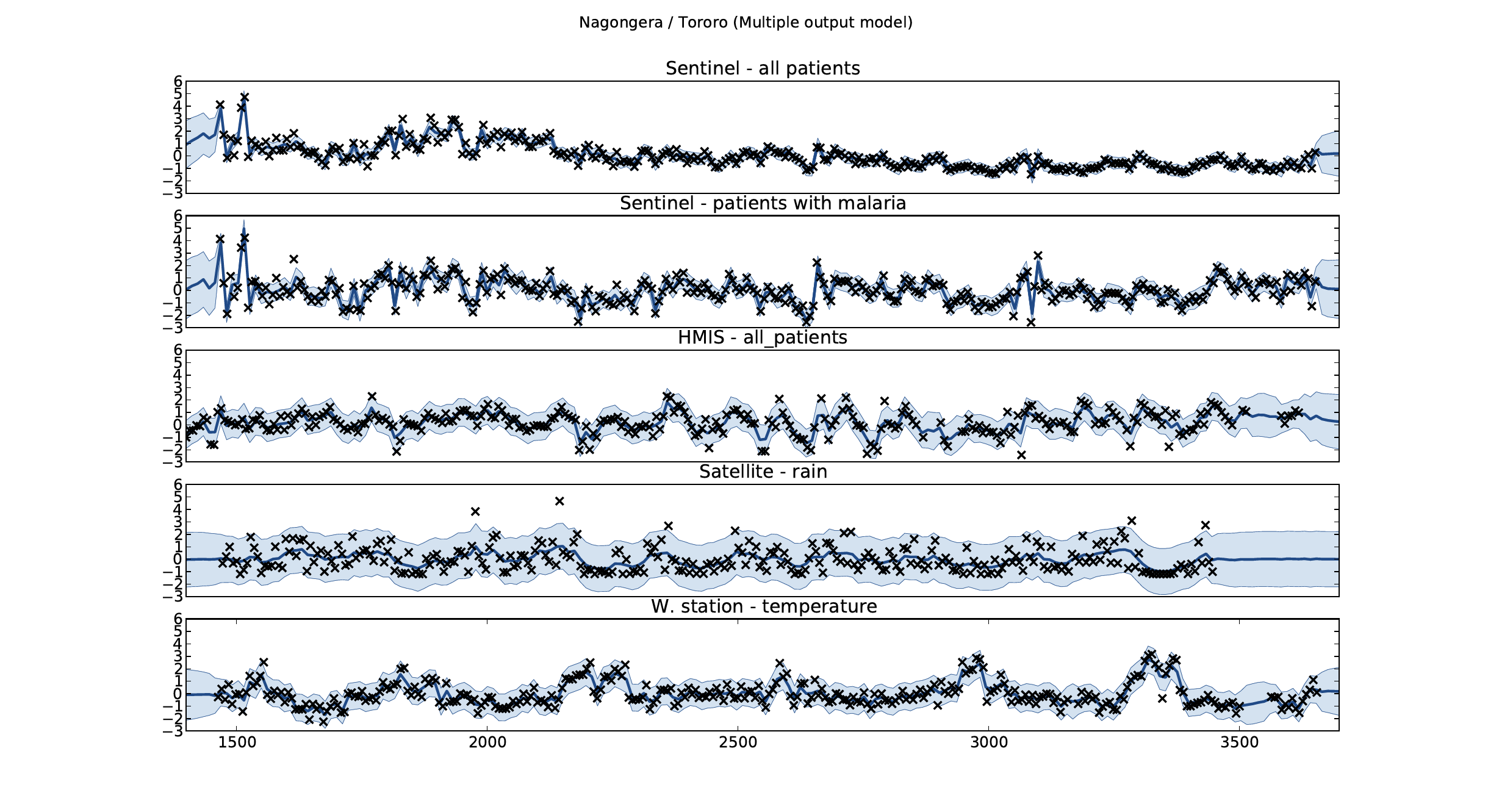

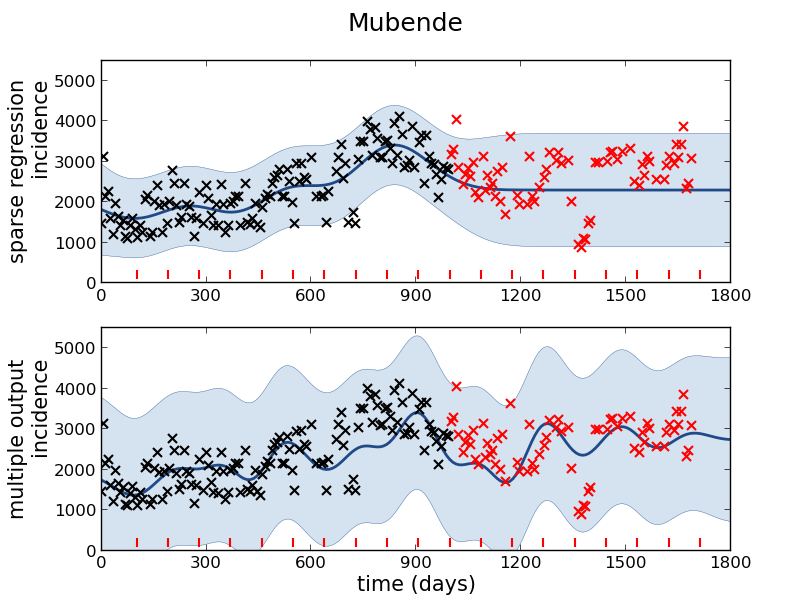

Example: Prediction of Malaria Incidence in Uganda

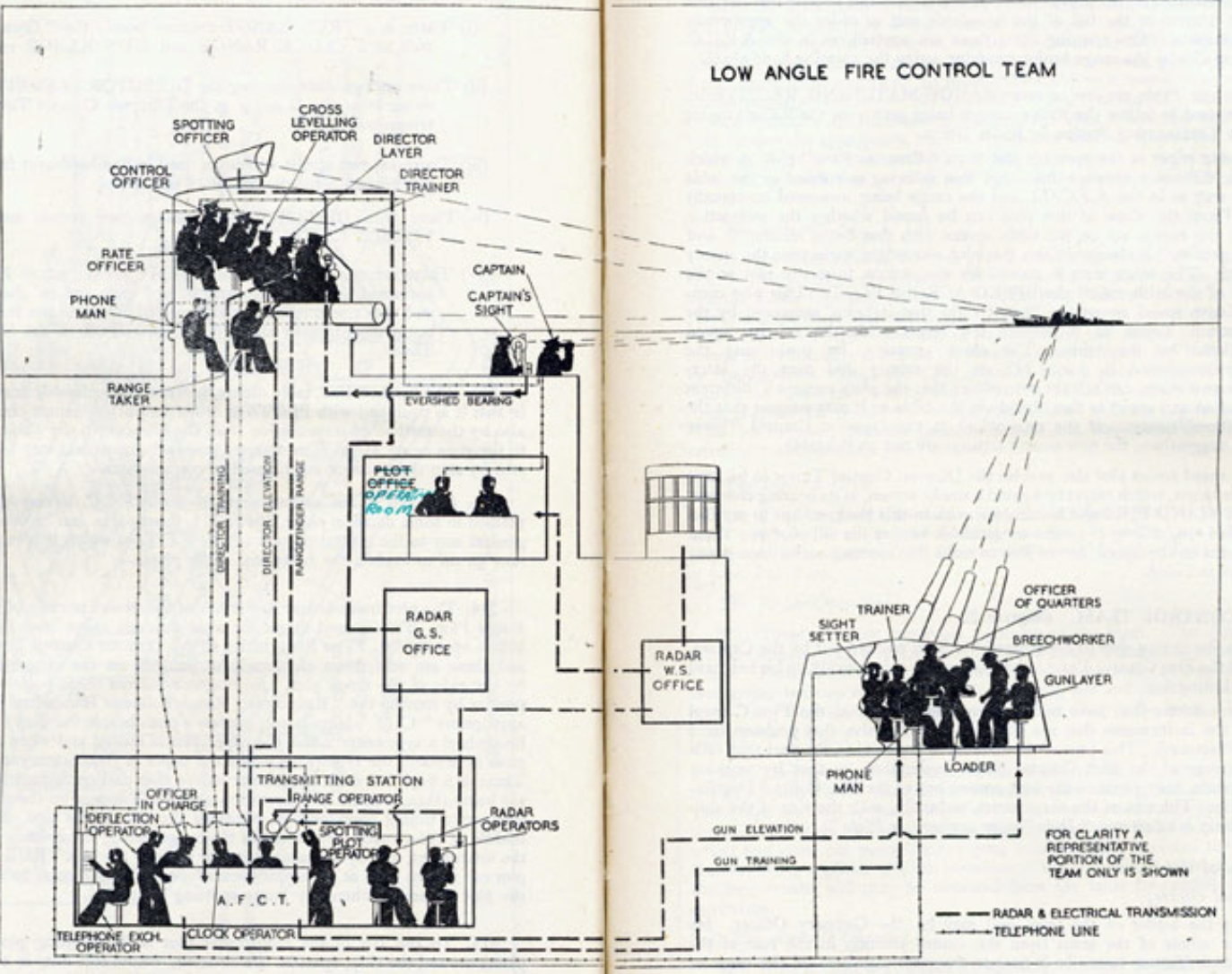

As an example of using Gaussian process models within the full pipeline from data to decision, we’ll consider the prediction of malaria incidence in Uganda. For the purposes of this study malaria reports come in two forms, HMIS reports from health centres and Sentinel data, which is curated by the WHO. There are limited sentinel sites and many HMIS sites.

The work is from Ricardo Andrade Pacheco’s PhD thesis, completed in collaboration with John Quinn and Martin Mubangizi (Andrade-Pacheco et al., 2014; Mubangizi et al., 2014). John and Martin were initially from the AI-DEV group at the University of Makerere in Kampala, and more recently they were based at UN Global Pulse in Kampala. You can see the work summarized on the UN Global Pulse disease outbreaks project site.

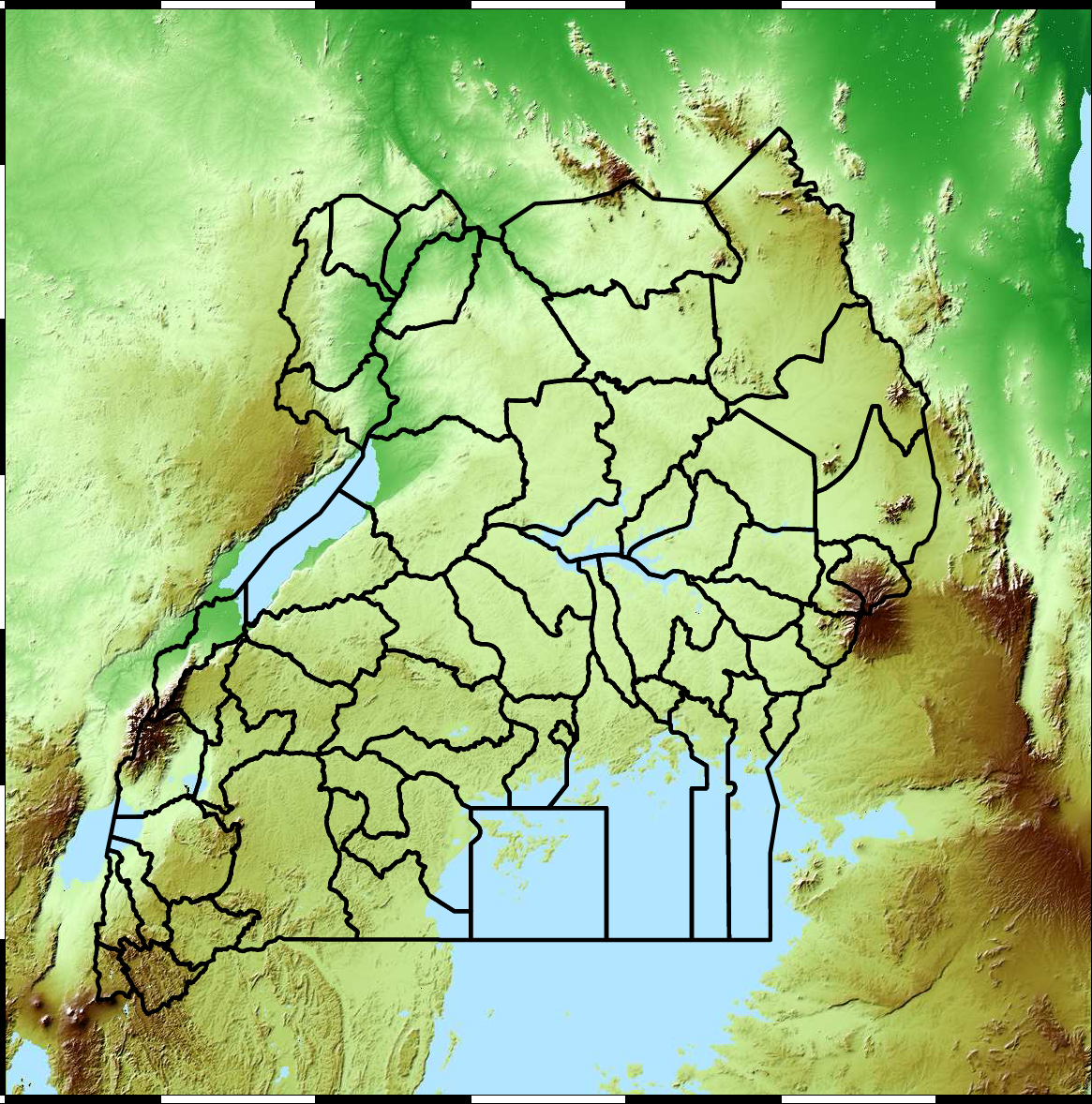

Malaria data is spatial data. Uganda is split into districts, and health reports can be found for each district. This suggests that models such as conditional random fields could be used for spatial modelling, but there are two complexities with this. First of all, occasionally districts split into two. Secondly, sentinel sites are a specific location within a district, such as Nagongera which is a sentinel site based in the Tororo district.

The common standard for collecting health data on the African continent is from the Health management information systems (HMIS). However, this data suffers from missing values (Gething et al., 2006) and diagnosis of diseases like typhoid and malaria may be confounded.

Figure: The Tororo district, where the sentinel site, Nagongera, is located.

World Health Organization Sentinel Surveillance systems are set up “when high-quality data are needed about a particular disease that cannot be obtained through a passive system”. Several sentinel sites give accurate assessment of malaria disease levels in Uganda, including a site in Nagongera.

Figure: Sentinel and HMIS data along with rainfall and temperature for the Nagongera sentinel station in the Tororo district.

In collaboration with the AI Research Group at Makerere we chose to investigate whether Gaussian process models could be used to assimilate information from these two different sources of disease information. Further, we were interested in whether local information on rainfall and temperature could be used to improve malaria estimates.

The aim of the project was to use WHO Sentinel sites, alongside rainfall and temperature, to improve predictions from HMIS data of levels of malaria.

Figure: The Mubende District.

Figure: Prediction of malaria incidence in Mubende.

Figure: The project arose out of the Gaussian process summer school held at Makerere in Kampala in 2013. The school led, in turn, to the Data Science Africa initiative.

Early Warning Systems

Figure: The Kabarole district in Uganda.

Figure: Estimate of the current disease situation in the Kabarole district over time. Estimate is constructed with a Gaussian process with an additive covariance function.

Health monitoring system for the Kabarole district. Here we have fitted the reports with a Gaussian process with an additive covariance function. It has two components, one is a long time scale component (in red above) the other is a short time scale component (in blue).
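To illustrate what an additive covariance with a long and a short time scale component means, here is a small sketch using synthetic data. The exponentiated quadratic form of each component, the hyperparameter values and the data are all assumptions made for this example; they are not the settings used in the malaria study.

```python
import numpy as np

def eq_cov(t1, t2, variance, lengthscale):
    """Exponentiated quadratic covariance between two sets of time points."""
    d2 = (t1[:, None] - t2[None, :]) ** 2
    return variance * np.exp(-0.5 * d2 / lengthscale ** 2)

# Synthetic weekly 'reports': a slow trend, a faster fluctuation and noise.
rng = np.random.default_rng(1)
t = np.arange(0.0, 104.0)
y = np.sin(2 * np.pi * t / 104.0) + 0.3 * np.sin(2 * np.pi * t / 8.0)
y = y + 0.1 * rng.standard_normal(t.shape)

# Hypothetical hyperparameters (fixed by hand, not learned from data).
K_long = eq_cov(t, t, variance=1.0, lengthscale=30.0)
K_short = eq_cov(t, t, variance=0.1, lengthscale=2.0)
noise_var = 0.01

# Additive covariance: the sum of the two components plus observation noise.
K = K_long + K_short + noise_var * np.eye(len(t))
alpha = np.linalg.solve(K, y)

# The posterior mean at the training times splits into one term per component.
mean_long = K_long @ alpha    # slowly varying trend
mean_short = K_short @ alpha  # shorter time scale variation
mean_total = mean_long + mean_short
```

Because the covariance is a sum, the posterior mean decomposes into a sum of one contribution per component, which is what allows a long term trend and a short term signal to be read off separately.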

Monitoring proceeds by considering two aspects of the curve. Is the blue line (the short term report signal) above the red (which represents the long term trend)? If so, we have higher than expected reports. If this is the case and the gradient is still positive (i.e. reports are going up) we encode this with a red color. If it is the case and the gradient of the blue line is negative (i.e. reports are going down) we encode this with an amber color. Conversely, if the blue line is below the red and decreasing, we color green. On the other hand, if it is below red but increasing, we color yellow.

This gives us an early warning system for disease. Red is a bad situation getting worse, amber is bad, but improving. Green is good and getting better and yellow good but degrading.

Finally, there is a gray region which represents when the scale of the effect is small.
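The color rules above can be written as a small decision function. This is a sketch under stated assumptions: the function name, its arguments and the `min_effect` threshold for the gray region are hypothetical, since the text does not say how small an effect must be to count as gray or how a gradient of exactly zero is treated (here it is treated as not increasing).

```python
def warning_color(short_term, long_term, short_gradient, min_effect=0.1):
    """Map the state of the two fitted curves to a status color."""
    excess = short_term - long_term
    if abs(excess) < min_effect:
        return "gray"  # scale of the effect is small
    if excess > 0:
        # Higher than expected reports: worsening is red, improving is amber.
        return "red" if short_gradient > 0 else "amber"
    # Lower than expected reports: degrading is yellow, improving is green.
    return "yellow" if short_gradient > 0 else "green"

print(warning_color(1.5, 1.0, 0.2))   # above trend and rising
print(warning_color(0.5, 1.0, -0.2))  # below trend and falling
```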

Figure: The map of Ugandan districts with an overview of the Malaria situation in each district.

These colors can now be observed directly on a spatial map of the districts to give an immediate impression of the current status of the disease across the country.

Deep Learning

Classical statistical models and simple machine learning models have a great deal in common. The main difference between the fields is philosophical. Machine learning practitioners are typically more concerned with the quality of prediction (e.g. measured by ROC curve) while statisticians tend to focus more on the interpretability of the model and the validity of any decisions drawn from that interpretation. For example, a statistical model may be used to validate whether a large scale intervention (such as the mass provision of mosquito nets) has had a long term effect on disease (such as malaria). In this case one of the covariates is likely to be the provision level of nets in a particular region. The response variable would be the rate of malaria disease in the region. The parameter, \(\beta_1\), associated with that covariate will demonstrate a positive or negative effect which would be validated in answering the question. The focus in statistics would be less on the accuracy of the response variable and more on the validity of the interpretation of the effect variable, \(\beta_1\).

A machine learning practitioner on the other hand would typically denote the parameter \(w_1\), instead of \(\beta_1\) and would only be interested in the output of the prediction function, \(f(\cdot)\) rather than the parameter itself. The general formalism of the prediction function allows for non-linear models. In machine learning, the emphasis on prediction over interpretability means that non-linear models are often used. The parameters, \(\mathbf{w}\), are a means to an end (good prediction) rather than an end in themselves (interpretable).

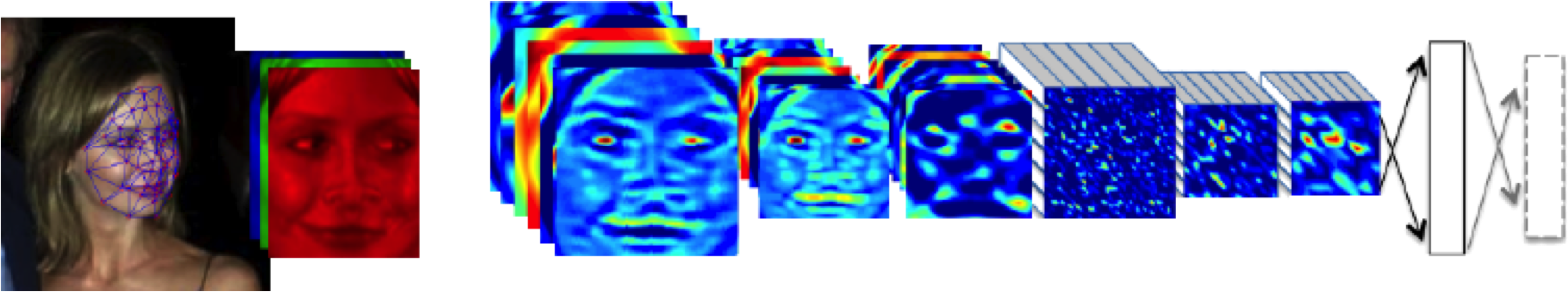

DeepFace

Figure: The DeepFace architecture (Taigman et al., 2014), visualized through colors to represent the functional mappings at each layer. There are 120 million parameters in the model.

The DeepFace architecture (Taigman et al., 2014) consists of layers that deal with translation invariances, known as convolutional layers. These layers are followed by three locally-connected layers and two fully-connected layers. Color illustrates feature maps produced at each layer. The neural network includes more than 120 million parameters, where more than 95% come from the local and fully connected layers.

Deep Learning as Pinball

Figure: Deep learning models are composition of simple functions. We can think of a pinball machine as an analogy. Each layer of pins corresponds to one of the layers of functions in the model. Input data is represented by the location of the ball from left to right when it is dropped in from the top. Output class comes from the position of the ball as it leaves the pins at the bottom.

Sometimes deep learning models are described as being like the brain, or too complex to understand, but one analogy I find useful to help convey the gist of these models is to think of them as being similar to early pinball machines.

In a deep neural network, we input a number (or numbers), whereas in pinball, we input a ball.

Think of the location of the ball on the left-right axis as a single number. Our simple pinball machine can only take one number at a time. As the ball falls through the machine, each layer of pins can be thought of as a different layer of ‘neurons’. Each layer acts to move the ball from left to right.

In a pinball machine, when the ball gets to the bottom it might fall into a hole defining a score, in a neural network, that is equivalent to the decision: a classification of the input object.

An image has more than one number associated with it, so it is like playing pinball in a hyper-space.
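The idea of a deep model as a composition of simple functions can be sketched in a few lines. The layer sizes, the tanh nonlinearity and the random parameters below are arbitrary choices for illustration; this is an untrained toy network, not the DeepFace architecture.

```python
import numpy as np

rng = np.random.default_rng(0)

def layer(W, b):
    """Return a simple function: an affine map followed by a tanh nonlinearity."""
    return lambda h: np.tanh(W @ h + b)

# Three layers with randomly initialised parameters (the 'pins' before learning).
sizes = [4, 8, 8, 3]  # input dimension, two hidden widths, number of classes
layers = [layer(rng.standard_normal((n_out, n_in)), rng.standard_normal(n_out))
          for n_in, n_out in zip(sizes[:-1], sizes[1:])]

def network(x):
    """Drop the 'ball' in at the top and pass it through each layer in turn."""
    h = x
    for f in layers:
        h = f(h)
    return h

x = rng.standard_normal(4)                # an input with more than one number
scores = network(x)                       # where the ball leaves the machine
predicted_class = int(np.argmax(scores))  # the resulting classification
print(scores, predicted_class)
```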

Figure: At initialization, the pins, which represent the parameters of the function, aren’t in the right place to bring the balls to the correct decisions.

Figure: After learning the pins are now in the right place to bring the balls to the correct decisions.

Learning involves moving all the pins to be in the correct position, so that the ball ends up in the right place when it’s fallen through the machine. But moving all these pins in hyperspace can be difficult.

In a hyper-space you have to put a lot of data through the machine to explore the positions of all the pins. Even when you feed many millions of data points through the machine, there are likely to be regions in the hyper-space where no ball has passed. When future test data passes through the machine in a new route unusual things can happen.

Adversarial examples exploit this high-dimensional space. If you have access to the pinball machine, you can use gradient methods to find a position for the ball in the hyper-space where the image looks like one thing, but will be classified as another.
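As a rough illustration of the gradient idea, the sketch below applies a single sign-of-gradient step to a simple logistic classifier. This is an assumption-laden toy: the weights `w`, the input `x`, the step size `epsilon` and the helper names are all hypothetical, and a logistic model stands in for a deep network.

```python
import numpy as np

def predict_proba(x, w, b):
    """Probability of class 1 under a logistic model."""
    return 1.0 / (1.0 + np.exp(-(x @ w + b)))

def adversarial_step(x, w, b, target, epsilon):
    """Move every input dimension by epsilon in the direction that
    increases the probability of `target`."""
    p = predict_proba(x, w, b)
    # Gradient of log p(target | x) with respect to x for a logistic model.
    grad = (target - p) * w
    return x + epsilon * np.sign(grad)

rng = np.random.default_rng(0)
dim = 1000                               # many input dimensions, like pixels
w = rng.normal(size=dim) / np.sqrt(dim)  # hypothetical trained weights
b = 0.0
x = rng.normal(size=dim)                 # hypothetical input

p_before = predict_proba(x, w, b)
target = 0 if p_before > 0.5 else 1      # aim for the opposite class
x_adv = adversarial_step(x, w, b, target, epsilon=0.1)
p_after = predict_proba(x_adv, w, b)

print(p_before, p_after, np.max(np.abs(x_adv - x)))
```

No single dimension moves by more than `epsilon`, yet because there are many dimensions the small changes add up and the predicted probability shifts towards the other class.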

Probabilistic methods explore more of the space by considering a range of possible paths for the ball through the machine. This helps to make them more data efficient and gives some robustness to adversarial examples.

What are Large Language Models?

Probability Conversations

Figure: The focus so far has been on reducing uncertainty to a few representative values and sharing numbers with human beings. We forget that most people can be confused by basic probabilities, for example the prosecutor’s fallacy.

In practice we know that probabilities can be very unintuitive, for example in court there is a fallacy known as the “prosecutor’s fallacy” that confuses conditional probabilities. This can cause problems in jury trials (Thompson, 1989).
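A small worked example shows how far apart the two conditional probabilities can be. The numbers are hypothetical and chosen only for illustration: a forensic match that occurs by chance for 1 in 10,000 innocent people, a culprit who always matches, and a pool of 100,000 people who could equally be the culprit before the evidence is seen.

```python
def posterior_innocent(p_match_given_innocent, p_match_given_guilty, prior_guilty):
    """P(innocent | match) computed with Bayes' rule."""
    prior_innocent = 1.0 - prior_guilty
    evidence = (p_match_given_innocent * prior_innocent
                + p_match_given_guilty * prior_guilty)
    return p_match_given_innocent * prior_innocent / evidence

# Hypothetical numbers, see the text above.
p_match_given_innocent = 1e-4
p_innocent_given_match = posterior_innocent(
    p_match_given_innocent,
    p_match_given_guilty=1.0,
    prior_guilty=1e-5,
)

print(p_match_given_innocent)   # chance of a match if innocent
print(p_innocent_given_match)   # chance of innocence given a match
```

Under these assumptions the probability of a match given innocence is 0.0001, but the probability of innocence given a match is roughly 0.91. The fallacy is to treat the first number as if it were the second.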

The MONIAC

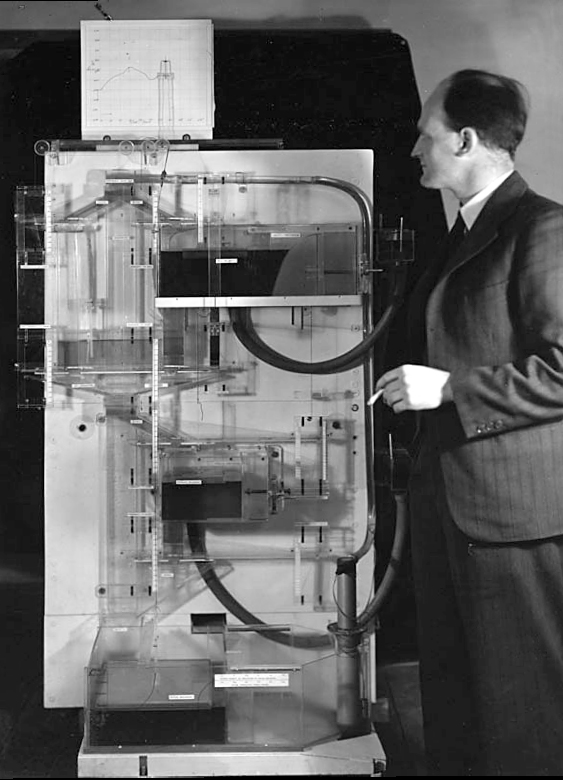

The MONIAC was an analogue computer designed to simulate the UK economy. Analogue computers work through analogy; the analogy in the MONIAC is that both money and water flow. The MONIAC exploits this through a system of tanks, pipes, valves and floats that represent the flow of money through the UK economy. Water flowed from the treasury tank at the top of the model to other tanks representing government spending, such as health and education. The machine was initially designed for teaching support but was also found to be a useful economic simulator. Several were built and today you can see the original at Leeds Business School. There is also one in the London Science Museum and one in the University of Cambridge’s economics faculty.

Figure: Bill Phillips and his MONIAC (completed in 1949). The machine is an analogue computer designed to simulate the workings of the UK economy.

See Lawrence (2024) MONIAC p. 232-233, 266, 343.

But if we can avoid the pitfalls of counterfeit people, this also offers us an opportunity to psychologically represent (Heider, 1958) the machine in a manner where humans can communicate without special training. This in turn offers the opportunity to overcome the challenge of intellectual debt.

Despite the lack of interpretability of machine learning models, they allow us access to what the machine is doing in a way that bypasses many of the traditional techniques developed in statistics. But understanding this new route for access is a major new challenge.

HAM

The Human-Analogue Machine or HAM therefore provides a route through which we could better understand our world through improving the way we interact with machines.

Figure: The trinity of human, data, and computer, highlighting the modern phenomenon. The communication channel between computer and data now has an extremely high bandwidth. The channel between human and computer and the channel between data and human are narrow. There is a new direction of information flow: information is reaching us mediated by the computer. The focus on classical statistics reflected the importance of the direct communication between human and data. The modern challenges of data science emerge when that relationship is being mediated by the machine.

The HAM can provide an interface between the digital computer and the human, allowing humans to work closely with computers regardless of their understanding of the more technical parts of software engineering.

Figure: The HAM now sits between us and the traditional digital computer.

Of course, this route also opens new avenues for manipulation, new ways in which the machine can undermine our autonomy or exploit our cognitive foibles. The major challenge we face is steering between these worlds, so that we gain the advantage of the computer’s bandwidth without undermining our culture and individual autonomy.

See Lawrence (2024) human-analogue machine (HAMs) p. 343-347, 359-359, 365-368.

Complexity in Action

As an exercise in understanding complexity, watch the following video. You will see the basketball being bounced around, and the players moving. Your job is to count the passes of those dressed in white and ignore those of the individuals dressed in black.

Figure: Daniel Simons’s famous illusion “monkey business”. Focus on the movement of the ball distracts the viewer from seeing other aspects of the image.

In a classic study Simons and Chabris (1999) ask subjects to count the number of passes of the basketball between players on the team wearing white shirts. Fifty percent of the time, these subjects don’t notice the gorilla moving across the scene.

The phenomenon of inattentional blindness is well known, e.g. in their paper Simons and Chabris quote the Hungarian neurologist, Rezsö Bálint,

It is a well-known phenomenon that we do not notice anything happening in our surroundings while being absorbed in the inspection of something; focusing our attention on a certain object may happen to such an extent that we cannot perceive other objects placed in the peripheral parts of our visual field, although the light rays they emit arrive completely at the visual sphere of the cerebral cortex.

Rezsö Bálint 1907 (translated in Husain and Stein 1988, page 91)

When we combine the complexity of the world with our relatively low bandwidth for information, problems can arise. Our focus on what we perceive to be the most important problem can cause us to miss other (potentially vital) contextual information.

This phenomenon is known as selective attention or ‘inattentional blindness’.

Figure: For a longer talk on inattentional bias from Daniel Simons see this video.

Data Selective Attention Bias

We are going to see how inattention biases can play out in data analysis by going through a simple example. The analysis involves body mass index and activity information.

BMI Steps Data

The BMI Steps example is taken from Yanai and Lercher (2020). We are given a data set of body-mass index measurements against step counts. For convenience we have packaged the data so that it can be easily downloaded.

import pods

data = pods.datasets.bmi_steps()

X = data['X']

y = data['Y']

It is good practice to give our variables interpretable names so that the analysis may be clearly understood by others. Here the steps count is the first dimension of the covariate, the bmi is the second dimension and the gender is stored in y with 1 for female and 0 for male.

steps = X[:, 0]

bmi = X[:, 1]

gender = y[:, 0]

We can check the mean steps and the mean of the BMI.

print('Steps mean is {mean}.'.format(mean=steps.mean()))

print('BMI mean is {mean}.'.format(mean=bmi.mean()))

BMI Steps Data Analysis

We can also separate out the means from the male and female populations. In python this can be done by setting male and female indices as follows.

male_ind = (gender==0)

female_ind = (gender==1)

And now we can extract the variables for the two populations.

male_steps = steps[male_ind]

male_bmi = bmi[male_ind]

And as before we compute the mean.

print('Male steps mean is {mean}.'.format(mean=male_steps.mean()))

print('Male BMI mean is {mean}.'.format(mean=male_bmi.mean()))

Similarly, we can get the same result for the female portion of the population.

female_steps = steps[female_ind]

female_bmi = bmi[female_ind]

print('Female steps mean is {mean}.'.format(mean=female_steps.mean()))

print('Female BMI mean is {mean}.'.format(mean=female_bmi.mean()))

Interestingly, the female BMI average is slightly higher than the male BMI average. The number of steps in the male group is higher than that in the female group. Perhaps the steps and the BMI are anti-correlated: the more steps, the lower the BMI.

SciPy provides a statistics package, scipy.stats. We’ll import it in Python so that we can try to understand the correlation between the steps and the BMI.
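For reference, Pearson's correlation can also be computed directly from its definition: the covariance of the two variables divided by the product of their standard deviations. The sketch here does this on a small made-up array. The helper name `pearson` and the values in `toy_steps` and `toy_bmi` are hypothetical and are not the BMI Steps data.

```python
import numpy as np

def pearson(a, b):
    """Pearson's correlation coefficient computed from its definition."""
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    a_centred = a - a.mean()
    b_centred = b - b.mean()
    # The 1/n factors in the covariance and the variances cancel.
    return (a_centred @ b_centred) / np.sqrt(
        (a_centred @ a_centred) * (b_centred @ b_centred)
    )

# Made-up values where more steps go with a lower BMI.
toy_steps = np.array([2000.0, 4000.0, 6000.0, 8000.0, 10000.0])
toy_bmi = np.array([30.0, 27.0, 26.0, 23.0, 22.0])

r = pearson(toy_steps, toy_bmi)
print("Toy correlation: {corr}".format(corr=r))
```

A value near -1 indicates strong anti-correlation, a value near 0 indicates little linear relationship, and a value near 1 indicates strong positive correlation. The pearsonr function from scipy.stats used in this analysis returns the same coefficient together with a p-value.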

from scipy.stats import pearsonr

corr, _ = pearsonr(steps, bmi)

print("Pearson's overall correlation: {corr}".format(corr=corr))

male_corr, _ = pearsonr(male_steps, male_bmi)

print("Pearson's correlation for males: {corr}".format(corr=male_corr))

female_corr, _ = pearsonr(female_steps, female_bmi)

print("Pearson's correlation for females: {corr}".format(corr=female_corr))

A Hypothesis as a Liability

This analysis is from an article titled “A Hypothesis as a Liability” (Yanai and Lercher, 2020). They start their article with the following quote from Hermann Hesse.

“ ‘When someone seeks,’ said Siddhartha, ‘then it easily happens that his eyes see only the thing that he seeks, and he is able to find nothing, to take in nothing. […] Seeking means: having a goal. But finding means: being free, being open, having no goal.’ ”

Hermann Hesse

Their idea is that having a hypothesis can constrain our thinking. However, in answer to their paper Felin et al. (2021) argue that some form of hypothesis is always necessary, suggesting that the absence of a hypothesis can itself be a liability.

My view is captured in the introductory chapter to an edited volume on computational systems biology that I worked on with Mark Girolami, Magnus Rattray and Guido Sanguinetti.

Figure: Quote from Lawrence (2010) highlighting the importance of interaction between data and hypothesis.

Popper nicely captures the interaction between hypothesis and data by relating it to the chicken and the egg. The important thing is that these two co-evolve.

Number Theatre

Unfortunately, we don’t always have time to wait for this process to converge to an answer we can all rely on before a decision is required.

Not only can we be misled by data before a decision is made, but sometimes we can be misled by data to justify the making of a decision. David Spiegelhalter refers to the phenomenon of “Number Theatre” in a conversation with Andrew Marr from May 2020 on the presentation of data.

Figure: Professor Sir David Spiegelhalter on Andrew Marr on 10th May 2020 speaking about some of the challenges around data, data presentation, and decision making in a pandemic. David mentions number theatre at 9 minutes 10 seconds.

Data Theatre

Data Theatre exploits data inattention bias to present a particular view on events that may misrepresent them through selective presentation. Statisticians are one of the few groups that are trained with a sufficient degree of data skepticism. But data theatre can also be combatted by ensuring there are domain experts present, and that they can speak freely.

Figure: The phenomenon of number theatre or data theatre was described by David Spiegelhalter and is nicely summarized by Martin Robbins in this Substack article https://martinrobbins.substack.com/p/data-theatre-why-the-digital-dashboards.

Sir David Spiegelhalter

The statistician’s craft is based on humility in front of data and developing the appropriate skeptical thinking around conclusions from data. The best individual I’ve seen at conveying and developing that sense is Sir David Spiegelhalter.

The Art of Statistics

Figure: The Art of Statistics by David Spiegelhalter is an excellent read on the pitfalls of data interpretation.

The Art of Statistics (Spiegelhalter, 2019) brings important examples from statistics to life in an intelligent and entertaining way. It is highly readable and gives an opportunity to fast-track towards the important skill of data-skepticism that is the mark of a professional statistician.

The Art of Uncertainty

David has also released a new book that focusses on Uncertainty.

See Spiegelhalter (2024).

In today’s lecture we’ve drilled down further on a difficult aspect of data science. By focusing too much on the data and the technical challenges we face, we can forget the context. But to do data science well, we must not forget the context of the data. We need to pay attention to domain experts and introduce their understanding to our analysis. Above all we must not forget that data is almost always (in the end) about people.

Further Reading

- Section 5.2.2 up to pg 182 of Rogers and Girolami (2011)

References

Thanks!

For more information on these subjects and more you might want to check the following resources.

- book: The Atomic Human

- twitter: @lawrennd

- podcast: The Talking Machines

- newspaper: Guardian Profile Page

- blog: http://inverseprobability.com