AI and Data Science

LT2, William Gates Building

Introduction

Statistics to Deep Learning

\[\text{data} + \text{model} \stackrel{\text{compute}}{\rightarrow} \text{prediction}\]

From Model to Decision

\[\text{data} + \text{model} \stackrel{\text{compute}}{\rightarrow} \text{prediction}\]

Classical Statistical Analysis

- Remain more important than ever.

- Provide sanity checks for our ideas and code.

- Enable us to visualize our analysis bugs.

What is Machine Learning?

\[ \text{data} + \text{model} \stackrel{\text{compute}}{\rightarrow} \text{prediction}\]

- data : observations, could be actively or passively acquired (meta-data).

- model : assumptions, based on previous experience (other data! transfer learning etc), or beliefs about the regularities of the universe. Inductive bias.

- prediction : an action to be taken or a categorization or a quality score.

- Royal Society Report: Machine Learning: Power and Promise of Computers that Learn by Example

- To combine data with a model we need:

- a prediction function \(f(\cdot)\), which encodes our beliefs about the regularities of the universe;

- an objective function \(E(\cdot)\), which defines the cost of misprediction.

Machine Learning

- Driver of two different domains:

- Data Science: arises from the fact that we now capture data by happenstance.

- Artificial Intelligence: emulation of human behaviour.

- Connection: Internet of Things

Machine Learning

- Driver of two different domains:

- Data Science: arises from the fact that we now capture data by happenstance.

- Artificial Intelligence: emulation of human behaviour.

- Connection: Internet of People

What does Machine Learning do?

- ML Automates through Data

- Strongly related to statistics.

- Field underpins revolution in data science and AI

- With AI:

- logic, robotics, computer vision, language, speech

- With Data Science:

- databases, data mining, statistics, visualization, software systems

What does Machine Learning do?

- Automation scales by codifying processes and automating them.

- Need:

- Interconnected components

- Compatible components

- Early examples:

- cf. Colt 45, Ford Model T

Codify Through Mathematical Functions

- How does machine learning work?

- Jumper (jersey/sweater) purchase with logistic regression

\[ \text{odds} = \frac{p(\text{bought})}{p(\text{not bought})} \]

\[ \log \text{odds} = w_0 + w_1 \text{age} + w_2 \text{latitude}.\]

Sigmoid Function
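
For reference, the textbook logistic sigmoid is written out below. Taking this to be the function the slide refers to is an assumption, as the slide itself shows no formula. The second identity shows that the sigmoid inverts the log odds, which is why a linear model of the log odds turns into a probability once it is passed through \(\sigma(\cdot)\).

\[ \sigma(z) = \frac{1}{1 + \exp(-z)}, \qquad \log \frac{\sigma(z)}{1 - \sigma(z)} = z. \]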

\[ p(\text{bought}) = \sigma\left(w_0 + w_1 \text{age} + w_2 \text{latitude}\right).\]

\[ p(\text{bought}) = \sigma\left(\mathbf{ w}^\top \mathbf{ x}\right).\]
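
The vector form above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions: the weights and the customer features are hypothetical values chosen for the example, not fitted to any data, and the first entry of \(\mathbf{x}\) is fixed at 1 so that \(w_0\) acts as the bias.

```python
import math


def sigmoid(z):
    """Logistic sigmoid, mapping any real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def predict_bought(x, w):
    """p(bought) = sigmoid(w^T x); x[0] is fixed at 1 to carry the bias w_0."""
    activation = sum(w_j * x_j for w_j, x_j in zip(w, x))
    return sigmoid(activation)


# Hypothetical weights [w_0, w_1, w_2]: illustrative values, not fitted to data.
w = [-3.0, 0.02, 0.05]
# Hypothetical customer: bias term, age in years, latitude in degrees.
x = [1.0, 40.0, 52.2]

p = predict_bought(x, w)
print(f"p(bought) = {p:.3f}")
```

Writing the bias as an extra feature equal to 1 is what lets the three-term sum collapse into the single inner product \(\mathbf{w}^\top \mathbf{x}\).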

\[ y= f\left(\mathbf{ x}, \mathbf{ w}\right).\]

We call \(f(\cdot)\) the prediction function.

Fit to Data

- Use an objective function

\[E(\mathbf{ w}, \mathbf{Y}, \mathbf{X})\]

- E.g. least squares \[E(\mathbf{ w}, \mathbf{Y}, \mathbf{X}) = \sum_{i=1}^n\left(y_i - f(\mathbf{ x}_i, \mathbf{ w})\right)^2.\]
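
As a rough sketch, the least squares objective can be evaluated directly from its definition. The linear prediction function and the toy data below are assumptions made for illustration; any other \(f(\mathbf{x}, \mathbf{w})\) could be passed in instead.

```python
def least_squares(w, Y, X, f):
    """E(w, Y, X): sum of squared differences between y_i and f(x_i, w)."""
    return sum((y_i - f(x_i, w)) ** 2 for y_i, x_i in zip(Y, X))


def linear(x, w):
    """A hypothetical prediction function: f(x, w) = w^T x."""
    return sum(w_j * x_j for w_j, x_j in zip(w, x))


# Toy data (illustrative only): first feature is a constant 1 for the bias.
X = [[1.0, 0.0], [1.0, 1.0], [1.0, 2.0]]
Y = [1.0, 3.0, 5.0]

error_exact = least_squares([1.0, 2.0], Y, X, linear)  # weights that reproduce Y
error_off = least_squares([0.0, 2.0], Y, X, linear)    # every prediction is 1 too low
print(error_exact, error_off)
```

The objective is zero only when every prediction matches its target, and it grows with the square of each miss.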

Two Components

- Prediction function, \(f(\cdot)\)

- Objective function, \(E(\cdot)\)
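
To show how the two components fit together, here is a minimal sketch that fits \(\mathbf{w}\) by minimising a least squares \(E(\cdot)\) for a linear \(f(\cdot)\). Plain gradient descent, the learning rate, the iteration count and the toy data are all assumptions chosen for the illustration; the slides do not prescribe an optimiser.

```python
def f(x, w):
    """Prediction function (assumed linear here): f(x, w) = w^T x."""
    return sum(w_j * x_j for w_j, x_j in zip(w, x))


def E(w, Y, X):
    """Objective function: least squares error of f on the data."""
    return sum((y - f(x, w)) ** 2 for y, x in zip(Y, X))


def gradient(w, Y, X):
    """Gradient of E with respect to w for the linear f above."""
    grad = [0.0] * len(w)
    for y, x in zip(Y, X):
        residual = y - f(x, w)
        for j, x_j in enumerate(x):
            grad[j] += -2.0 * residual * x_j
    return grad


def fit(Y, X, learning_rate=0.05, iterations=2000):
    """Minimise E by gradient descent, starting from w = 0."""
    w = [0.0] * len(X[0])
    for _ in range(iterations):
        g = gradient(w, Y, X)
        w = [w_j - learning_rate * g_j for w_j, g_j in zip(w, g)]
    return w


# Toy data (illustrative only), generated by y = 1 + 2 * x with no noise.
X = [[1.0, 0.0], [1.0, 1.0], [1.0, 2.0]]
Y = [1.0, 3.0, 5.0]

w_fit = fit(Y, X)
print(w_fit, E(w_fit, Y, X))
```

The prediction function fixes the form of the answer, while the objective function decides which parameters within that form count as best.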

Prediction vs Interpretation

\[ p(\text{bought}) = \sigma\left(w_0 + w_1 \text{age} + w_2 \text{latitude}\right).\]

\[ p(\text{bought}) = \sigma\left(\beta_0 + \beta_1 \text{age} + \beta_2 \text{latitude}\right).\]
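
One common way to read the contrast between the two forms is offered here as an assumption about the slide's intent, not a statement taken from it. With \(w\), only the quality of the prediction matters. With \(\beta\), the individual coefficients are themselves the object of interest. For example, holding latitude fixed, each additional year of age multiplies the odds of purchase by \(\exp(\beta_1)\):

\[ \frac{\text{odds}(\text{age} + 1, \text{latitude})}{\text{odds}(\text{age}, \text{latitude})} = \exp(\beta_1). \]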

Example: Prediction of Malaria Incidence in Uganda

- Work with Ricardo Andrade Pacheco, John Quinn and Martin Mubangizi (Makerere University, Uganda)

- See AI-DEV Group.

- See UN Global Pulse Disease Outbreaks Site

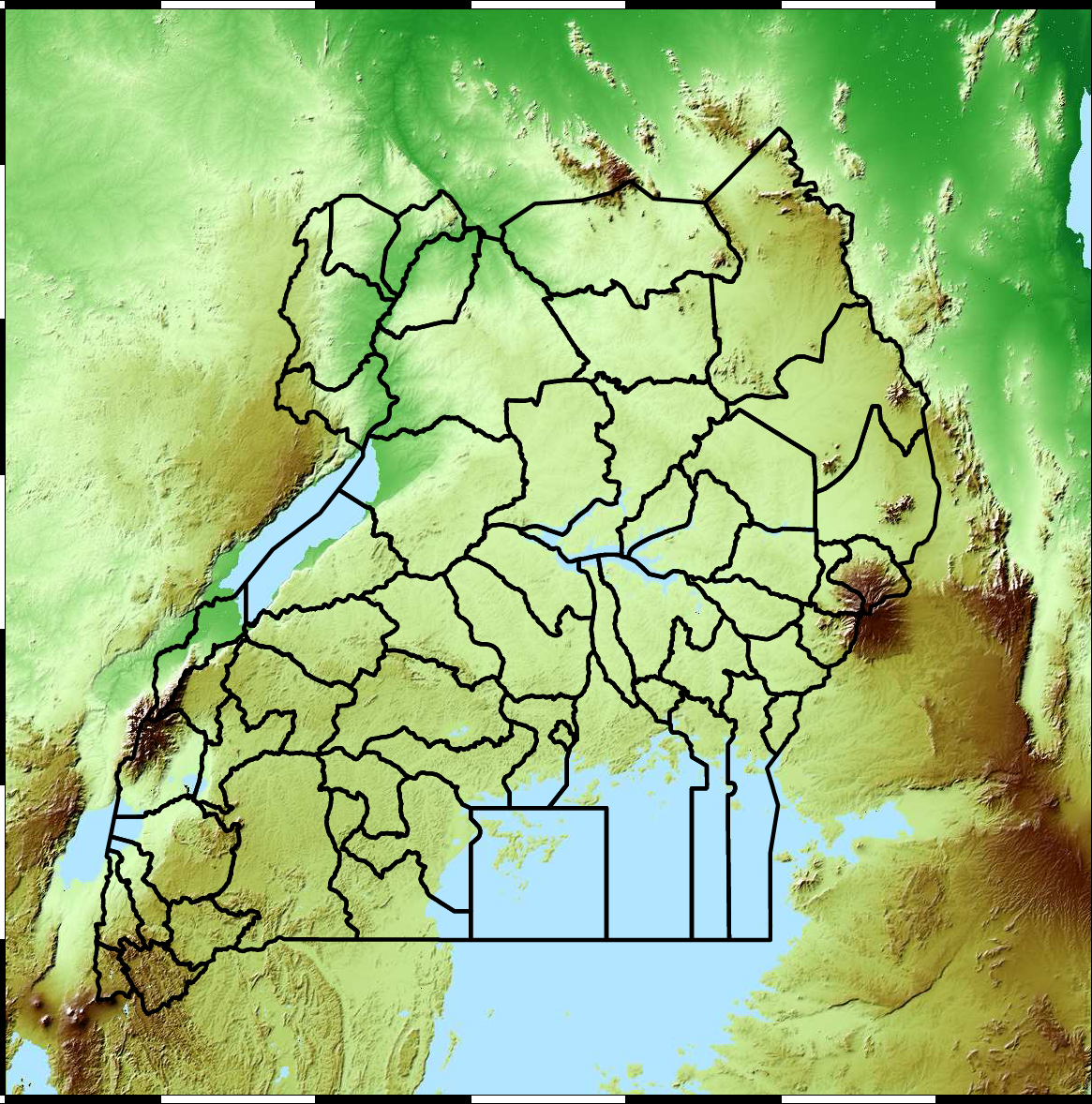

Malaria Prediction in Uganda

Tororo District

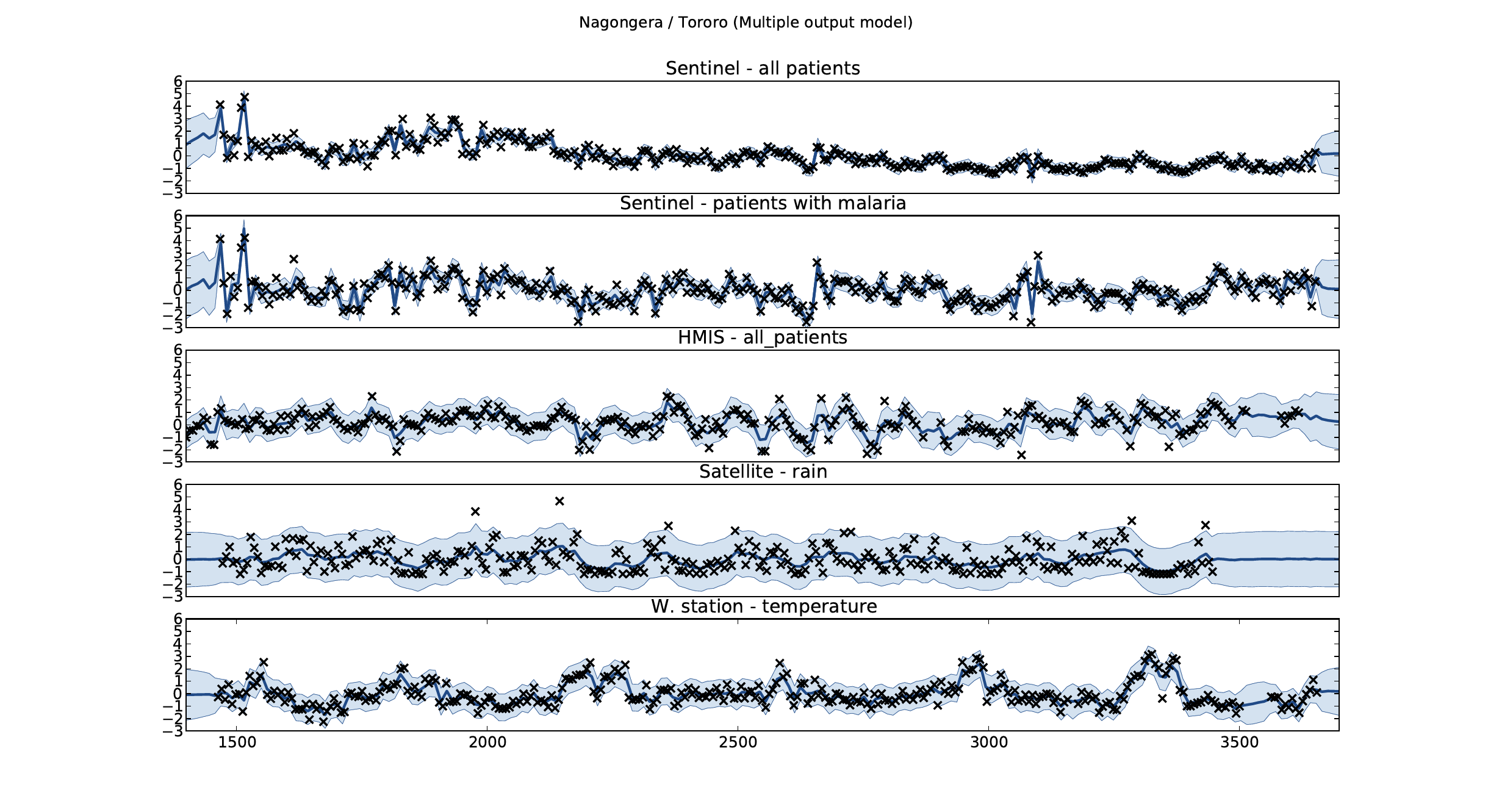

Malaria Prediction in Nagongera (Sentinel Site)

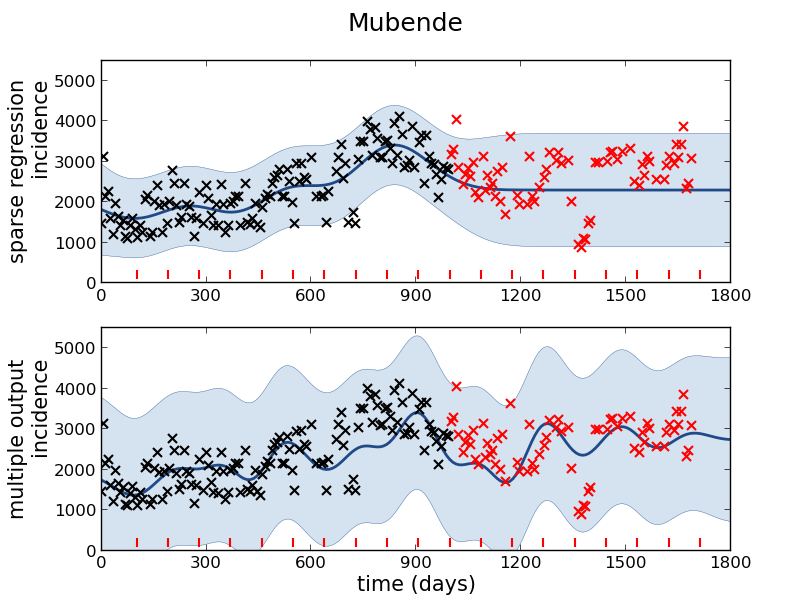

Mubende District

GP School at Makerere

Kabarole District

Early Warning System

Early Warning Systems

Deep Learning

These are interpretable models: vital for disease modeling etc.

Modern machine learning methods are less interpretable

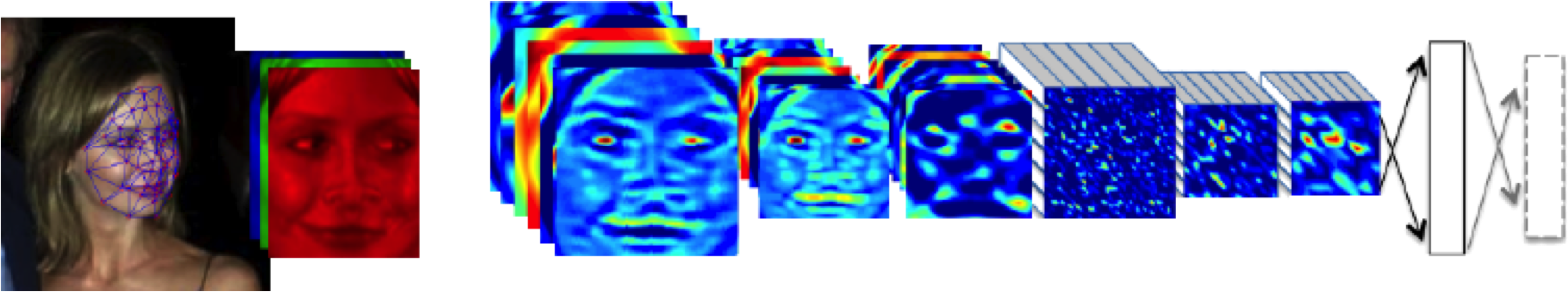

Example: face recognition

Outline of the DeepFace architecture. A front-end of a single convolution-pooling-convolution filtering on the rectified input, followed by three locally-connected layers and two fully-connected layers. Color illustrates feature maps produced at each layer. The net includes more than 120 million parameters, where more than 95% come from the local and fully connected layers.

What are Large Language Models?

In practice …

There is a lot of evidence that probabilities aren’t interpretable.

See e.g. Thompson (1989)

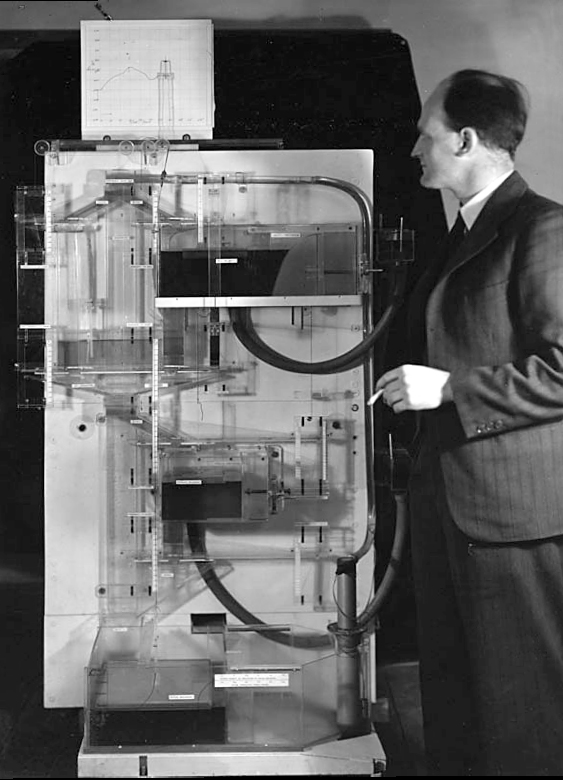

The MONIAC

In practice …

LLMs are already being used for robot planning (Huang et al., 2023).

Ambiguities are reduced when the machine has had large scale access to human cultural understanding.

Inner Monologue

HAM

Networked Interactions

Complexity in Action

Data Selective Attention Bias

A Hypothesis as a Liability

“ ‘When someone seeks,’ said Siddhartha, ‘then it easily happens that his eyes see only the thing that he seeks, and he is able to find nothing, to take in nothing. […] Seeking means: having a goal. But finding means: being free, being open, having no goal.’ ”

Hermann Hesse

The Scientific Process

Number Theatre

Data Theatre

Sir David Spiegelhalter

The Art of Statistics

The Art of Uncertainty

- By focussing on the technical side of data science, we tend to forget about the context of the data.

- Don’t forget that data is almost always about people.

References

Thanks!

book: The Atomic Human

twitter: @lawrennd

The Atomic Human pages: MONIAC 232-233, 266, 343; human-analogue machines (HAMs) 343-347, 359-359, 365-368.

podcast: The Talking Machines

newspaper: Guardian Profile Page

blog posts: