Unsupervised Learning with Gaussian Processes

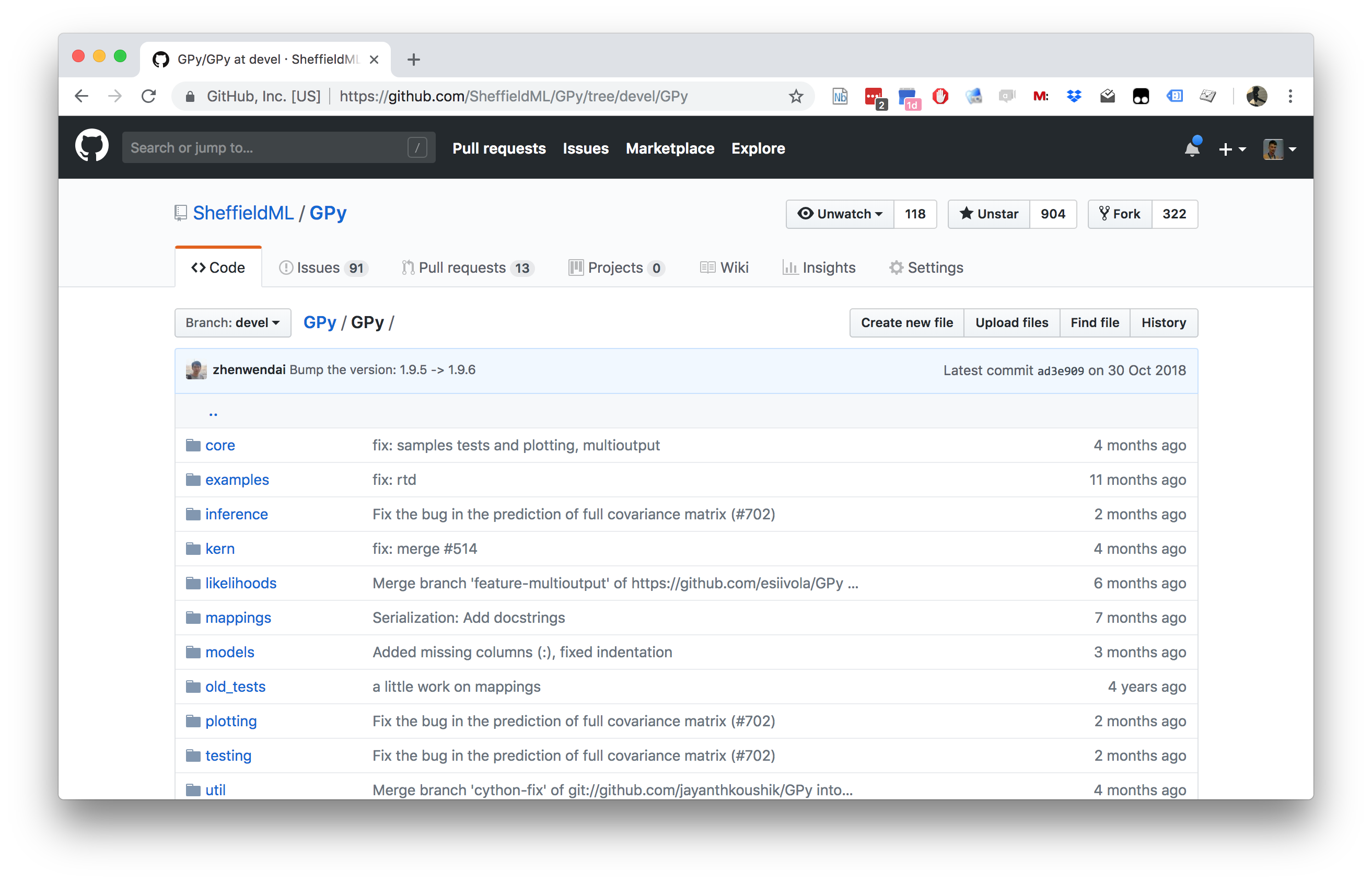

GPy: A Gaussian Process Framework in Python

- BSD Licensed software base.

- Wide availability of libraries, ‘modern’ scripting language.

- Allows us to set undergraduate Computer Science projects that use GPs.

- Available through GitHub: https://github.com/SheffieldML/GPy

- Reproducible research with Jupyter notebooks.

Features

- Probabilistic-style programming (specify the model, not the algorithm).

- Non-Gaussian likelihoods.

- Multivariate outputs.

- Dimensionality reduction.

- Approximations for large data sets.
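GPy expresses this model-first style through objects such as a kernel and a model class (for example `GPy.kern.RBF` and `GPy.models.GPRegression`). To show what such a specification implies computationally, here is a minimal numpy sketch of exact GP regression, assuming an RBF kernel and a Gaussian likelihood. It is an illustration under those assumptions, not GPy's implementation; the function names `rbf_kernel` and `gp_predict` are ours.

```python
import numpy as np

def rbf_kernel(X1, X2, variance=1.0, lengthscale=1.0):
    """Exponentiated quadratic (RBF) covariance between the rows of X1 and X2."""
    sqdist = (
        np.sum(X1**2, axis=1)[:, None]
        + np.sum(X2**2, axis=1)[None, :]
        - 2.0 * X1 @ X2.T
    )
    return variance * np.exp(-0.5 * np.maximum(sqdist, 0.0) / lengthscale**2)

def gp_predict(X, y, Xstar, noise_var=1e-2, **kern_args):
    """Posterior mean and variance of f at Xstar for a zero-mean GP prior
    with an RBF kernel and Gaussian noise of variance noise_var."""
    K = rbf_kernel(X, X, **kern_args) + noise_var * np.eye(X.shape[0])
    Ks = rbf_kernel(X, Xstar, **kern_args)               # n x m cross-covariance
    kss = np.diag(rbf_kernel(Xstar, Xstar, **kern_args))  # prior variance at Xstar
    L = np.linalg.cholesky(K)                             # K = L L^T
    alpha = np.linalg.solve(L.T, np.linalg.solve(L, y))   # K^{-1} y
    V = np.linalg.solve(L, Ks)
    mean = Ks.T @ alpha
    var = kss - np.sum(V**2, axis=0)
    return mean, var

# Usage: noisy samples of sin(x), then predict inside and far outside the data.
rng = np.random.default_rng(0)
X = np.linspace(0.0, 5.0, 20)[:, None]
y = np.sin(X[:, 0]) + 0.05 * rng.standard_normal(20)
mean, var = gp_predict(X, y, np.array([[2.5], [50.0]]), noise_var=0.05**2)
```

Far from the data the prediction reverts to the prior (mean 0, variance 1), which is the uncertainty handling the model specification buys without any extra algorithmic code.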

Difficulty for Probabilistic Approaches

Propagate a probability distribution through a non-linear mapping.

Normalisation of distribution becomes intractable.
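The difficulty can be seen numerically. A minimal sketch, assuming a one-dimensional Gaussian \(x \sim \mathcal{N}(0, 1)\) and the illustrative mapping \(y = \exp(x)\): sampling through the mapping is easy, but the result is no longer Gaussian. Here the density is still available by change of variables only because \(\exp\) is invertible; for a general non-linear mapping with added noise, the normaliser \(p(\mathbf{y}) = \int p(\mathbf{y}|\mathbf{x})p(\mathbf{x})\,\text{d}\mathbf{x}\) has no closed form.

```python
import numpy as np

rng = np.random.default_rng(1)
x = rng.standard_normal(200_000)   # samples from the Gaussian p(x) = N(0, 1)
y = np.exp(x)                      # push every sample through the non-linear map

mean = y.mean()
centred = y - mean
skewness = np.mean(centred**3) / np.mean(centred**2) ** 1.5
print(f"sample mean {mean:.3f}, sample skewness {skewness:.2f}")  # a Gaussian has skewness 0

def lognormal_pdf(v):
    """Density of y = exp(x) by change of variables: N(log v | 0, 1) / v."""
    return np.exp(-0.5 * np.log(v) ** 2) / (v * np.sqrt(2.0 * np.pi))

# Compare the change-of-variables density with the samples in a small bin around y = 1.
in_bin = np.mean((y > 0.95) & (y < 1.05)) / 0.1
```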

Getting Started and Downloading Data

Principal Component Analysis

- What is the right shape \(n\times p\) to use?
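A minimal numpy sketch of PCA, assuming the data matrix \(\mathbf{Y}\) is stored as \(n\times p\) with one row per data point; the function names `pca` and `pca_dual` are ours. The same latent coordinates come either from the SVD of the centred data (equivalently the eigenvectors of the \(p\times p\) covariance) or from the \(n\times n\) inner-product matrix \(\mathbf{Y}\mathbf{Y}^\top\); the second route is cheaper when \(p \gg n\).

```python
import numpy as np

def pca(Y, q):
    """Project the rows of an n x p data matrix Y onto its top-q principal
    directions. Returns the n x q latent coordinates and the p x q directions."""
    Yc = Y - Y.mean(axis=0)                       # centre each of the p columns
    U, s, Vt = np.linalg.svd(Yc, full_matrices=False)
    W = Vt[:q].T                                  # p x q orthonormal directions
    X = Yc @ W                                    # n x q coordinates (= U[:, :q] * s[:q])
    return X, W

def pca_dual(Y, q):
    """Same coordinates from the n x n inner-product matrix (up to sign)."""
    Yc = Y - Y.mean(axis=0)
    evals, evecs = np.linalg.eigh(Yc @ Yc.T)      # eigenvalues in ascending order
    idx = np.argsort(evals)[::-1][:q]             # indices of the q largest
    return evecs[:, idx] * np.sqrt(np.maximum(evals[idx], 0.0))

# Usage: fewer data points (n = 10) than dimensions (p = 500).
rng = np.random.default_rng(3)
Y = rng.standard_normal((10, 500))
X, W = pca(Y, 2)
Xd = pca_dual(Y, 2)
```

Eigenvectors are only defined up to sign, so the two routes agree in absolute value.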

Gaussian Process Latent Variable Model
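In the GP-LVM each of the \(p\) columns of the \(n\times p\) data matrix \(\mathbf{Y}\) is modelled as an independent draw from a GP over latent points \(\mathbf{X}\) (\(n\times q\)), all sharing one covariance \(\mathbf{K}\), giving \(\log p(\mathbf{Y}|\mathbf{X}) = -\frac{np}{2}\log 2\pi - \frac{p}{2}\log|\mathbf{K}| - \frac{1}{2}\text{tr}(\mathbf{K}^{-1}\mathbf{Y}\mathbf{Y}^\top)\). A minimal sketch of this objective, assuming an RBF kernel plus Gaussian noise with illustrative hyperparameter values; the function names are ours, and the step that optimises \(\mathbf{X}\) is omitted.

```python
import numpy as np

def rbf(X, variance=1.0, lengthscale=1.0):
    """RBF covariance between all pairs of latent points (rows of X)."""
    sq = np.sum(X**2, 1)[:, None] + np.sum(X**2, 1)[None, :] - 2.0 * X @ X.T
    return variance * np.exp(-0.5 * np.maximum(sq, 0.0) / lengthscale**2)

def gplvm_log_likelihood(X, Y, variance=1.0, lengthscale=1.0, noise_var=0.1):
    """log p(Y | X) = sum_j log N(Y[:, j] | 0, K + noise_var * I)."""
    n, p = Y.shape
    K = rbf(X, variance, lengthscale) + noise_var * np.eye(n)
    L = np.linalg.cholesky(K)
    logdet = 2.0 * np.sum(np.log(np.diag(L)))               # log |K|
    Kinv_Y = np.linalg.solve(L.T, np.linalg.solve(L, Y))    # K^{-1} Y
    # sum(Y * K^{-1} Y) equals tr(K^{-1} Y Y^T)
    return -0.5 * (n * p * np.log(2 * np.pi) + p * logdet + np.sum(Y * Kinv_Y))

# Usage: 8 latent points in 2-D explaining 3-dimensional data.
rng = np.random.default_rng(2)
X = rng.standard_normal((8, 2))
Y = rng.standard_normal((8, 3))
ll = gplvm_log_likelihood(X, Y)
```

Because the columns share \(\mathbf{K}\), the data enter only through the \(n\times n\) matrix \(\mathbf{Y}\mathbf{Y}^\top\), so the cost of each evaluation grows with \(n\) rather than with \(p\).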

CMU Mocap Database

CMU Motion Capture GP-LVM

CMU Mocap Visualisation

Example: Latent Doodle Space

Generalization with much less Data than Dimensions

The powerful uncertainty handling of GPs leads to surprising properties.

Non-linear models can be used where there are fewer data points than dimensions without overfitting.

(Baxter and Anjyo, 2006)

Example: Continuous Character Control

- Graph diffusion prior for enforcing connectivity between motions. \[\log p(\mathbf{X}) = w_c \sum_{i,j} \log K_{ij}^d\] with the graph diffusion kernel \(\mathbf{K}^d\) obtained from the matrix exponential \[\mathbf{K}^d = \exp(\beta \mathbf{H}) \qquad \text{with} \qquad \mathbf{H} = -\mathbf{T}^{-1/2} \mathbf{L} \mathbf{T}^{-1/2},\] where \(\mathbf{T}\) is a diagonal matrix with \(T_{ii} = \sum_j w(\mathbf{x}_i, \mathbf{x}_j)\), \(\mathbf{L}\) is the graph Laplacian \[L_{ij} = \begin{cases} \sum_k w(\mathbf{x}_i,\mathbf{x}_k) & \text{if } i=j, \\ -w(\mathbf{x}_i,\mathbf{x}_j) & \text{otherwise,} \end{cases}\] and \(w(\mathbf{x}_i,\mathbf{x}_j) = \|\mathbf{x}_i - \mathbf{x}_j\|^{-p}\) measures similarity.

(Levine et al., 2012)
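A minimal numpy sketch of this prior, assuming latent points \(\mathbf{X}\) given as an \(n\times q\) array, illustrative values for \(w_c\), \(\beta\) and \(p\), and \(w(\mathbf{x}_i,\mathbf{x}_i) = 0\): the similarity diverges at zero distance, so excluding self-edges is our assumption. Because \(\mathbf{H}\) is symmetric, the matrix exponential is formed from its eigendecomposition. This is an illustration of the formulas, not Levine et al.'s code; `diffusion_log_prior` is a hypothetical name.

```python
import numpy as np

def diffusion_log_prior(X, w_c=1.0, beta=1.0, p=2.0):
    """log p(X) = w_c * sum_ij log K^d_ij with K^d = exp(beta * H),
    H = -T^{-1/2} L T^{-1/2}. Returns (log prior, K^d)."""
    n = X.shape[0]
    dist = np.sqrt(np.sum((X[:, None, :] - X[None, :, :]) ** 2, axis=-1))
    off = ~np.eye(n, dtype=bool)
    W = np.zeros((n, n))
    W[off] = dist[off] ** (-p)               # w(x_i, x_j) = ||x_i - x_j||^{-p}, i != j
    T = W.sum(axis=1)                        # T_ii = sum_j w(x_i, x_j)
    L = np.diag(T) - W                       # graph Laplacian
    t = 1.0 / np.sqrt(T)
    H = -(t[:, None] * L * t[None, :])       # -T^{-1/2} L T^{-1/2}
    evals, evecs = np.linalg.eigh(H)         # H is symmetric
    Kd = (evecs * np.exp(beta * evals)) @ evecs.T   # matrix exponential exp(beta H)
    return w_c * np.sum(np.log(Kd)), Kd

# Usage: six latent points in two dimensions.
rng = np.random.default_rng(4)
X = rng.standard_normal((6, 2))
log_prior, Kd = diffusion_log_prior(X)
```

The off-diagonal entries of \(\mathbf{H}\) are non-negative, so every entry of \(\exp(\beta\mathbf{H})\) is positive for a connected graph and the logarithm in the prior is well defined.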

Character Control: Results

Data for Blastocyst Development in Mice: Single Cell TaqMan Arrays (Guo et al., 2010)

Principal Component Analysis

PCA Result

GP-LVM on the Data

Blastocyst Data: Isomap

Blastocyst Data: Locally Linear Embedding

Thanks!

- twitter: @lawrennd

- podcast: The Talking Machines

- newspaper: Guardian Profile Page

- blog: http://inverseprobability.com

References

Baxter, W.V., Anjyo, K.-I., 2006. Latent doodle space, in: EUROGRAPHICS. Vienna, Austria, pp. 477–485. https://doi.org/10.1111/j.1467-8659.2006.00967.x

Guo, G., Huss, M., Tong, G.Q., Wang, C., Sun, L.L., Clarke, N.D., Robson, P., 2010. Resolution of cell fate decisions revealed by single-cell gene expression analysis from zygote to blastocyst. Developmental Cell 18, 675–685. https://doi.org/10.1016/j.devcel.2010.02.012

Levine, S., Wang, J.M., Haraux, A., Popović, Z., Koltun, V., 2012. Continuous character control with low-dimensional embeddings. ACM Transactions on Graphics (SIGGRAPH 2012) 31.