The Challenges of Data Science

LT2, William Gates Building

Introduction

Data Science as Debugging

- Analogy: for software engineers, describe data science as debugging.

80/20 in Data Science

- Anecdotally, for a given challenge:

- 80% of time is spent on data wrangling.

- 20% of time is spent on modelling.

- Many companies employ ML engineers focussing on models, not data.

Lessons

- When you begin an analysis, behave as a debugger:

- Write test code as you go.

- Document tests and make them accessible.

- Be constantly skeptical.

- Develop a deep understanding of the best tools.

- Share your experience of challenges and have others review your work.
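One way to act on "write test code as you go" is to encode each assumption about the data as a check that runs every time the data are loaded. The sketch below is a minimal illustration only: the `check_records` helper, the `age` and `latitude` fields and their valid ranges are all hypothetical choices, not taken from any particular project.

```python
# Minimal sketch: encode assumptions about the data as explicit, reusable checks.
# The helper name, record fields (age, latitude) and valid ranges are hypothetical.

def check_records(records):
    """Return a list of human-readable problems found in the records."""
    problems = []
    for i, rec in enumerate(records):
        # Every record should carry both fields.
        for field in ("age", "latitude"):
            if rec.get(field) is None:
                problems.append(f"row {i}: missing {field}")
        # Range checks only run when the value is present.
        age = rec.get("age")
        if age is not None and not (0 <= age <= 120):
            problems.append(f"row {i}: implausible age {age}")
        lat = rec.get("latitude")
        if lat is not None and not (-90 <= lat <= 90):
            problems.append(f"row {i}: latitude {lat} outside [-90, 90]")
    return problems


records = [
    {"age": 34, "latitude": 52.2},
    {"age": -3, "latitude": 52.2},   # a data-entry error
    {"age": 51, "latitude": None},   # a missing value
]

for problem in check_records(records):
    print(problem)
```

Returning a list of problems, rather than stopping at the first failure, makes the checks easy to document, rerun and hand to a reviewer.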

Lessons

- When managing a data science process:

- Don’t deploy standard agile development; explore modifications, e.g. Kanban.

- Don’t leave data scientists alone to wade through the mess.

- Integrate the data analysis with other team activities.

- Have software engineers and domain experts work closely with data scientists.

Statistics to Deep Learning

\[\text{data} + \text{model} \stackrel{\text{compute}}{\rightarrow} \text{prediction}\]

From Model to Decision

\[\text{data} + \text{model} \stackrel{\text{compute}}{\rightarrow} \text{prediction}\]

Classical Statistical Analysis

- Remains more important than ever.

- Provides sanity checks for our ideas and code.

- Enables us to visualize bugs in our analysis.

What is Machine Learning?

\[ \text{data} + \text{model} \stackrel{\text{compute}}{\rightarrow} \text{prediction}\]

- data: observations, which could be actively or passively acquired (meta-data).

- model: assumptions, based on previous experience (other data! transfer learning, etc.) or beliefs about the regularities of the universe. Inductive bias.

- prediction: an action to be taken, a categorization or a quality score.

- Royal Society Report: Machine Learning: Power and Promise of Computers that Learn by Example

What is Machine Learning?

\[\text{data} + \text{model} \stackrel{\text{compute}}{\rightarrow} \text{prediction}\]

- To combine data with a model we need:

- a prediction function \(f(\cdot)\), which includes our beliefs about the regularities of the universe;

- an objective function \(E(\cdot)\), which defines the cost of misprediction.

Machine Learning

- Driver of two different domains:

- Data Science: arises from the fact that we now capture data by happenstance.

- Artificial Intelligence: emulation of human behaviour.

- Connection: Internet of Things

Machine Learning

- Driver of two different domains:

- Data Science: arises from the fact that we now capture data by happenstance.

- Artificial Intelligence: emulation of human behaviour.

- Connection: Internet of People

What does Machine Learning do?

- ML automates through data.

- Strongly related to statistics.

- The field underpins the revolution in data science and AI.

- With AI:

- logic, robotics, computer vision, language, speech

- With Data Science:

- databases, data mining, statistics, visualization, software systems

What does Machine Learning do?

- Automation scales by codifying processes and automating them.

- Need:

- Interconnected components

- Compatible components

- Early examples:

- cf. Colt 45, Ford Model T

Codify Through Mathematical Functions

- How does machine learning work?

- Jumper (jersey/sweater) purchase with logistic regression

\[ \text{odds} = \frac{p(\text{bought})}{p(\text{not bought})} \]

\[ \log \text{odds} = w_0 + w_1 \text{age} + w_2 \text{latitude}.\]
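Writing \(z = w_0 + w_1 \text{age} + w_2 \text{latitude}\) and \(p = p(\text{bought})\), and assuming only the two outcomes so that \(p(\text{not bought}) = 1 - p\), the step from the log odds to the sigmoid \(\sigma(\cdot)\) can be worked through in a few lines:

\[ \log \frac{p}{1-p} = z \;\Rightarrow\; \frac{p}{1-p} = e^{z} \;\Rightarrow\; p = e^{z} - p\,e^{z} \;\Rightarrow\; p\left(1 + e^{z}\right) = e^{z}, \]

\[ p = \frac{e^{z}}{1 + e^{z}} = \frac{1}{1 + e^{-z}} = \sigma(z). \]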

Sigmoid Function

\[ p(\text{bought}) = \sigma\left(w_0 + w_1 \text{age} + w_2 \text{latitude}\right).\]
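As a concrete illustration, the prediction function can be written out directly. This is a minimal sketch: the weights `W0`, `W1`, `W2` are hypothetical values chosen only to make the example run, not fitted to any data.

```python
import math

# Minimal sketch of the jumper-purchase prediction function.
# The weights are hypothetical, chosen only for illustration.
W0, W1, W2 = -4.0, 0.02, 0.05   # intercept, age weight, latitude weight


def sigmoid(z):
    """Logistic sigmoid: maps any real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def p_bought(age, latitude):
    """Probability of buying a jumper under the logistic regression model."""
    return sigmoid(W0 + W1 * age + W2 * latitude)


# A hypothetical customer aged 40 living at latitude 52.
p = p_bought(40, 52)
print(f"p(bought) = {p:.3f}")

# Taking the log of the odds recovers the linear predictor w_0 + w_1 age + w_2 latitude.
odds = p / (1 - p)
print(math.isclose(math.log(odds), W0 + W1 * 40 + W2 * 52))
```

With a positive latitude weight, the model predicts a higher purchase probability further north, which is the kind of regularity the weights are meant to encode.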

\[ p(\text{bought}) = \sigma\left(\mathbf{ w}^\top \mathbf{ x}\right).\]
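The vector form hides the intercept \(w_0\). A common convention, assumed here, is to prepend a constant 1 to each input \(\mathbf{x}\) so that \(w_0\) multiplies it; predictions for many customers then reduce to one matrix-vector product. The weights and inputs below are hypothetical.

```python
import numpy as np

# Minimal sketch of the vector form sigma(w^T x).
# Assumption: x is augmented with a leading 1 so that w_0 acts as the intercept.
w = np.array([-4.0, 0.02, 0.05])        # hypothetical [w_0, w_1, w_2]


def sigmoid(z):
    """Elementwise logistic sigmoid."""
    return 1.0 / (1.0 + np.exp(-z))


def predict(X, w):
    """Return p(bought) for each row of X (columns: age, latitude)."""
    X_aug = np.column_stack([np.ones(len(X)), X])  # prepend the bias column
    return sigmoid(X_aug @ w)


X = np.array([[40.0, 52.0],
              [25.0, 10.0]])
print(predict(X, w))
```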

\[ y= f\left(\mathbf{ x}, \mathbf{ w}\right).\]

We call \(f(\cdot)\) the prediction function.

Fit to Data

- Use an objective function

\[E(\mathbf{ w}, \mathbf{Y}, \mathbf{X})\]

- E.g. least squares \[E(\mathbf{ w}, \mathbf{Y}, \mathbf{X}) = \sum_{i=1}^n\left(y_i - f(\mathbf{ x}_i, \mathbf{ w})\right)^2.\]
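Fitting means choosing \(\mathbf{w}\) to make \(E\) small. The sketch below reduces the least-squares objective for the logistic prediction function by plain gradient descent on synthetic data. The data, step size and iteration count are arbitrary assumptions; for binary outcomes such as a purchase, a cross-entropy (log-likelihood) objective is the more usual choice, but least squares is kept here to match the equation above.

```python
import numpy as np

# Minimal sketch: fit w by reducing E(w) = sum_i (y_i - f(x_i, w))^2,
# with f(x, w) = sigma(w^T x). Data, step size and iterations are arbitrary.

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def objective(w, Y, X):
    """Least-squares error E(w, Y, X) for the logistic prediction function."""
    return np.sum((Y - sigmoid(X @ w)) ** 2)


def gradient(w, Y, X):
    """Gradient of E with respect to w (chain rule through the sigmoid)."""
    f = sigmoid(X @ w)
    return -2.0 * X.T @ ((Y - f) * f * (1.0 - f))


rng = np.random.default_rng(0)
n = 200
X = np.column_stack([np.ones(n), rng.normal(size=(n, 2))])  # bias + 2 features
true_w = np.array([-0.5, 1.5, -1.0])                        # synthetic "truth"
Y = (rng.uniform(size=n) < sigmoid(X @ true_w)).astype(float)  # binary labels

w = np.zeros(3)
E_before = objective(w, Y, X)
for _ in range(500):
    w -= 0.01 * gradient(w, Y, X)  # plain gradient descent step
E_after = objective(w, Y, X)
print(f"E before: {E_before:.2f}, E after: {E_after:.2f}")
```

At \(\mathbf{w} = \mathbf{0}\) every prediction is 0.5, so each squared error is exactly 0.25; the descent should then lower \(E\) from that baseline.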

Two Components

- Prediction function, \(f(\cdot)\)

- Objective function, \(E(\cdot)\)

Prediction vs Interpretation

\[ p(\text{bought}) = \sigma\left(w_0 + w_1 \text{age} + w_2 \text{latitude}\right).\]

\[ p(\text{bought}) = \sigma\left(\beta_0 + \beta_1 \text{age} + \beta_2 \text{latitude}\right).\]
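The switch from \(w\) to \(\beta\) signals a change of emphasis from prediction to interpretation. Under the logistic model, \(\beta_1\) is the change in log odds per unit of age, so \(e^{\beta_1}\) is the factor by which the odds change for a one-year increase with latitude held fixed. A minimal check, using hypothetical coefficients:

```python
import math

# Minimal sketch of reading a logistic regression coefficient as an effect size.
# The coefficients are hypothetical.
beta0, beta1, beta2 = -4.0, 0.02, 0.05


def odds(age, latitude):
    """Odds of buying, p / (1 - p), implied by the logistic model."""
    return math.exp(beta0 + beta1 * age + beta2 * latitude)


# One extra year of age, latitude fixed, multiplies the odds by exp(beta1).
ratio = odds(41, 52) / odds(40, 52)
print(ratio, math.exp(beta1))
```

The same holds for \(\beta_2\) and latitude; this reading only works because the model is linear in the log odds.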

Example: Prediction of Malaria Incidence in Uganda

- Work with Ricardo Andrade Pacheco, John Quinn and Martin Mubangizi (Makerere University, Uganda)

- See AI-DEV Group.

- See UN Global Pulse Disease Outbreaks Site.

Malaria Prediction in Uganda

Tororo District

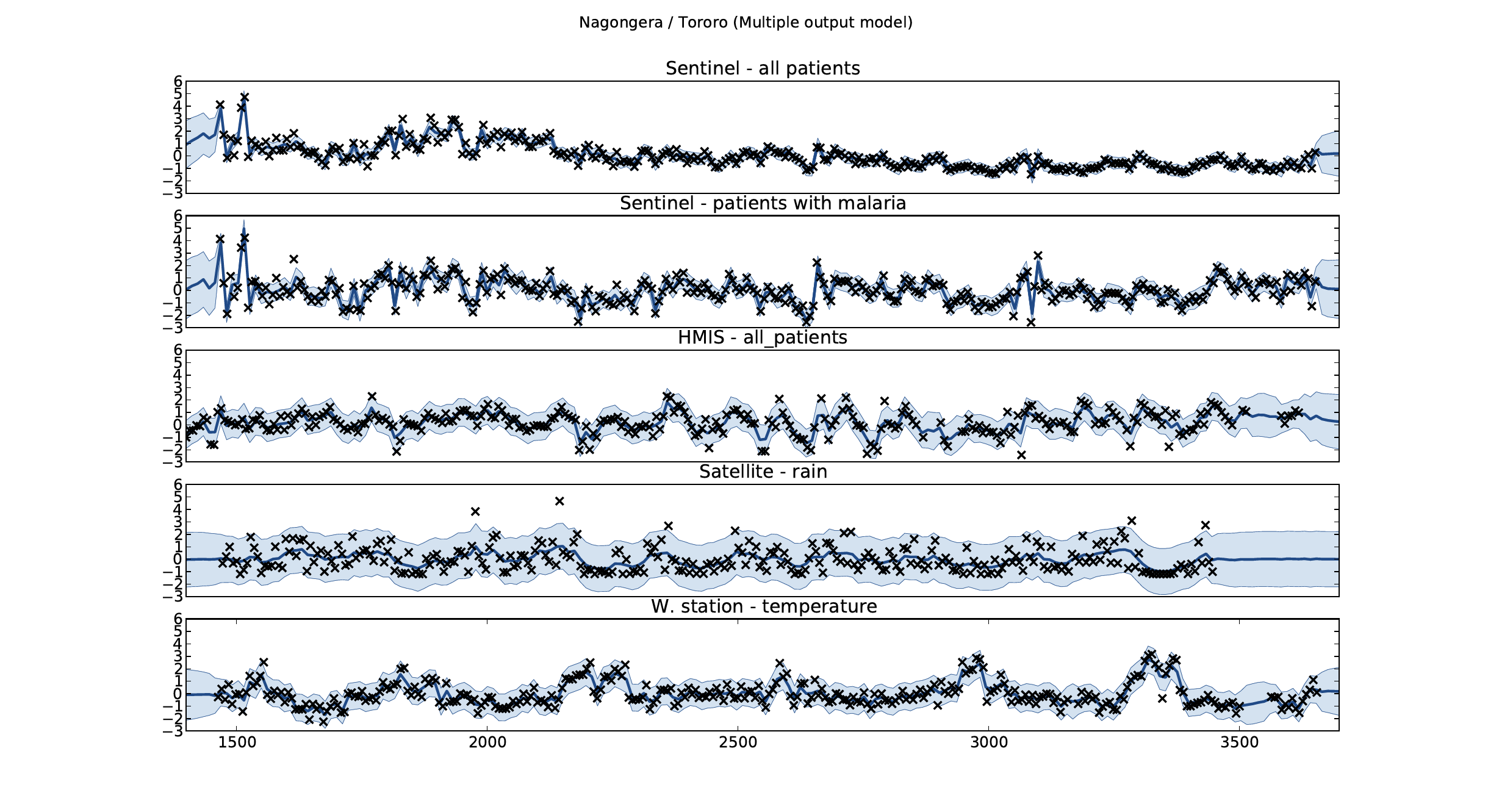

Malaria Prediction in Nagongera (Sentinel Site)

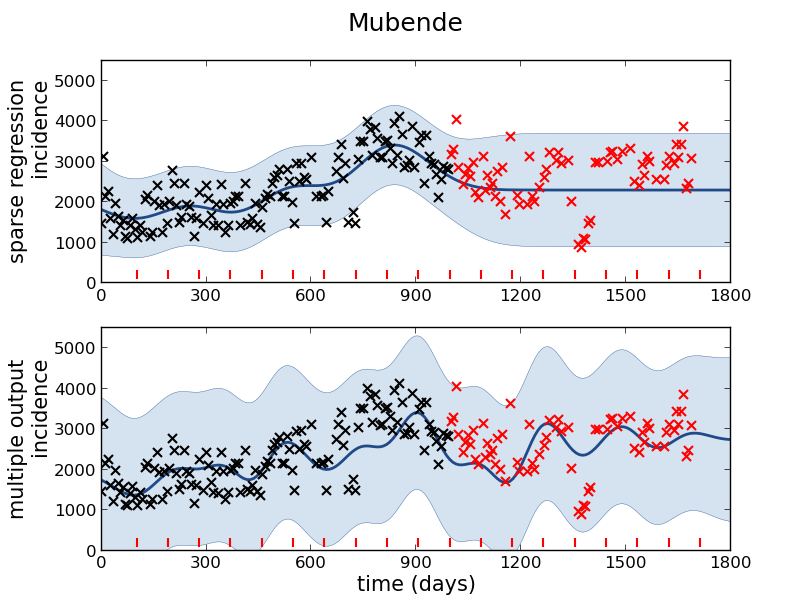

Mubende District

Malaria Prediction in Uganda

GP School at Makerere

Kabarole District

Early Warning System

Early Warning Systems

Deep Learning

These are interpretable models, which is vital for disease modelling and similar applications.

Modern machine learning methods are less interpretable.

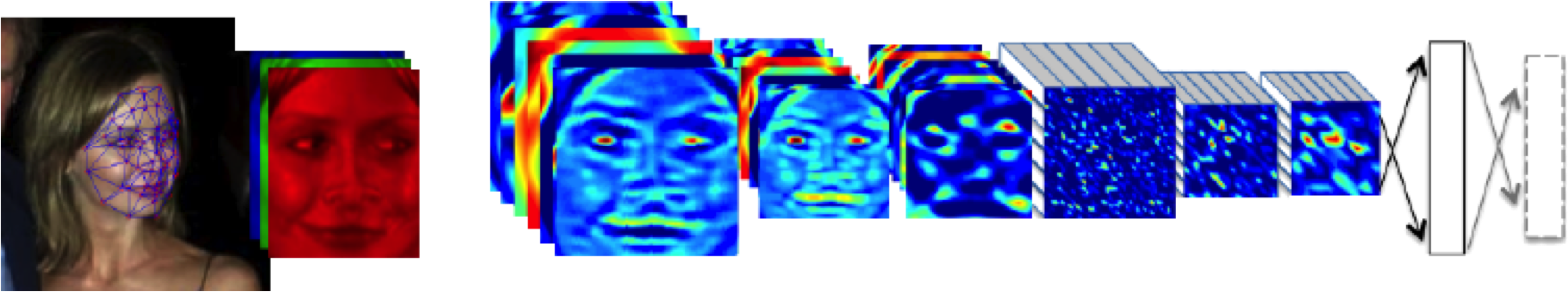

Example: face recognition

Outline of the DeepFace architecture. A front-end of a single convolution-pooling-convolution filtering on the rectified input, followed by three locally-connected layers and two fully-connected layers. Color illustrates feature maps produced at each layer. The net includes more than 120 million parameters, where more than 95% come from the locally connected and fully connected layers.
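Why do locally connected and fully connected layers account for most of the parameters? A convolution shares one set of filters across every position, a locally connected layer learns separate filters at each output position, and a fully connected layer has a weight for every input-output pair. The sketch below counts parameters for each layer type; all layer sizes are hypothetical (they are not DeepFace's), and it assumes the locally connected layer also has an unshared bias at each position, a convention that varies between implementations.

```python
# Minimal sketch: parameter counts for three layer types.
# All sizes are hypothetical; they are not taken from DeepFace.

def conv_params(k, c_in, c_out):
    """Shared k x k filters: one weight set per output channel, plus biases."""
    return k * k * c_in * c_out + c_out


def locally_connected_params(k, c_in, c_out, out_h, out_w):
    """Like a convolution, but every output location has its own filters and biases."""
    return out_h * out_w * conv_params(k, c_in, c_out)


def fully_connected_params(n_in, n_out):
    """Dense layer: a weight for every input-output pair, plus biases."""
    return n_in * n_out + n_out


conv = conv_params(k=3, c_in=32, c_out=32)
local = locally_connected_params(k=3, c_in=32, c_out=32, out_h=20, out_w=20)
dense = fully_connected_params(n_in=20 * 20 * 32, n_out=1024)
print(conv, local, dense)
```

Even with these modest sizes, the unshared and dense layers have hundreds to thousands of times more parameters than the convolution.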

What are Large Language Models?

In practice …

There is a lot of evidence that probabilities aren’t interpretable.

See, e.g., Thompson (1989).

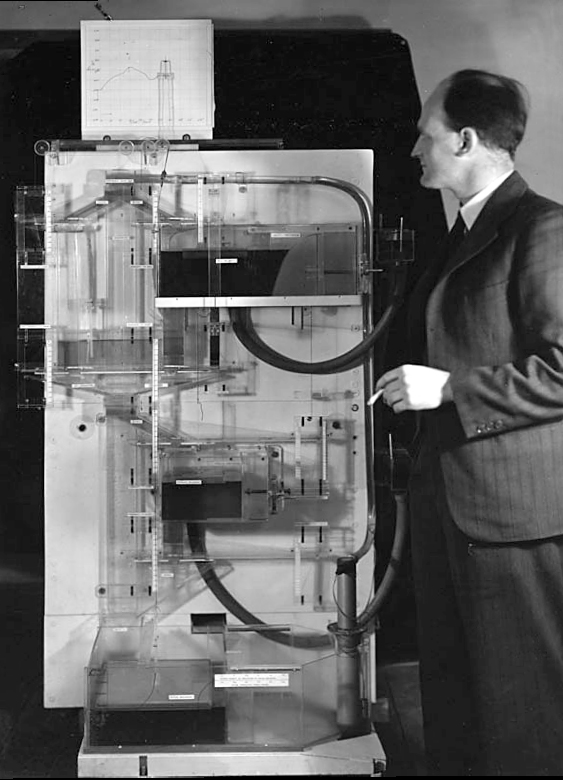

The MONIAC

Donald MacKay

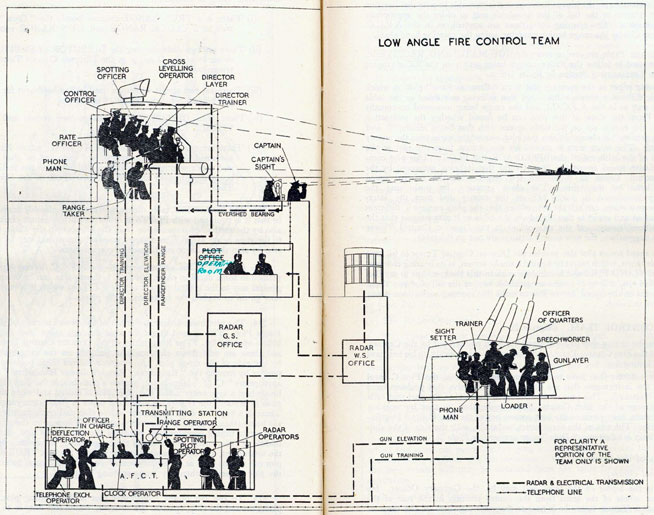

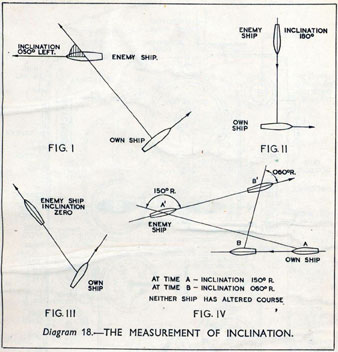

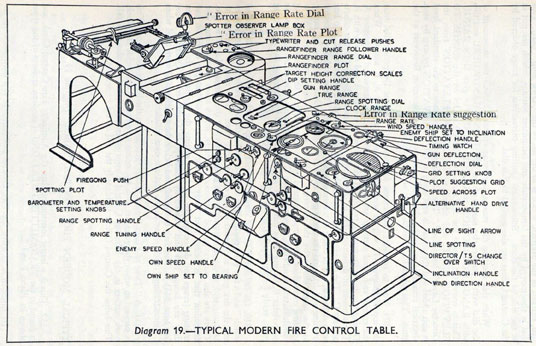

Fire Control Systems

Behind the Eye

Later in the 1940’s, when I was doing my Ph.D. work, there was much talk of the brain as a computer and of the early digital computers that were just making the headlines as “electronic brains.” As an analogue computer man I felt strongly convinced that the brain, whatever it was, was not a digital computer. I didn’t think it was an analogue computer either in the conventional sense.

Human Analogue Machine

A human-analogue machine is a machine that has created a feature space that is analogous to the “feature space” our brain uses to reason.

The latest generation of LLMs exhibits this characteristic, which gives them the ability to converse.

Counterfeit People

- Perils of this include counterfeit people.

- Daniel Dennett has described the challenges these bring in an article in The Atlantic.

Psychological Representation of the Machine

But if correctly done, the machine can be appropriately “psychologically represented”.

This might allow us to deal with the challenge of intellectual debt where we create machines we cannot explain.

In practice …

LLMs are already being used for robot planning (Huang et al. 2023).

Ambiguities are reduced when the machine has had large-scale access to human cultural understanding.

Inner Monologue

Networked Interactions

Conclusions

- By focussing on the technical side of data science, we tend to forget about the context of the data.

- Don’t forget that data is almost always about people.

References

Thanks!

twitter: @lawrennd

podcast: The Talking Machines

newspaper: Guardian Profile Page

blog posts: