Abstract

In this lab session we look at working with geospatial data, in conjunction with the house prices dataset you created in the previous practicals.

The check Session for this Practical is 12th November

2024. Prerequisite: practical 1, and a working database with

tables price paid data (i.e., pp_data) and postcodes(i.e.,

postcode_data)

In this lab session we look at working with geospacial data, in conjunction with the house prices dataset you created in the previous practicals. The goal is to enrich the data from the first practical with geographic data enabling better informed data analysis. Access to the price paid database is needed to complete some of the below exercises. You are asked to write reusable code that will help you in the assessment.

Accessing Open Street Maps

Open Street Maps (OSM) is an open geographic database that can provide useful information about different locations and places in the planet. In this example, we will download data about the city of Kampala, Uganda. As always, we should start by installing some Python packages.

%pip install osmnx%pip uninstall --yes matplotlib%pip install matplotlib==3.7.1import osmnx as ox

import matplotlib.pyplot as plt

import warnings

warnings.filterwarnings("ignore", category=FutureWarning, module='osmnx')We will download data of Kamplala, Uganda, which has the following latitude and longitude.

place_name = "Kampala, Uganda"

latitude = 0.347596 # Kampala latitude

longitude = 32.582520 # Kampala longitudeplacestub = place_name.lower().replace(' ', '-').replace(',','')We’ll create a bounding box which is 0.02 degrees wide, 1 degree is around 111km (circumference of the Earth is around 40,000 km and 40,000/360=111km). Note: will this approximation work well in all countries?

box_width = 0.02 # About 2.2 km

box_height = 0.02

north = latitude + box_height/2

south = latitude - box_width/2

west = longitude - box_width/2

east = longitude + box_width/2Now we’ll download a set of points of interest from OpenStreetMap. We can specify the points of interest we’re interested in by building a small dictionary containing their labels as follows. A Point of Interest is a location with certain importance in the geographic area. They can vary from amenities to touristic places as you can see in the following.

# Retrieve POIs

tags = {

"amenity": True,

"buildings": True,

"historic": True,

"leisure": True,

"shop": True,

"tourism": True,

"religion": True,

"memorial": True

}We can use osmnx to download all such points of interest

within a given bounding box.

pois = ox.geometries_from_bbox(north, south, east, west, tags)That operation can take some time, particularly as the bounding box grows larger. Once it is complete we can check how many points of interest we have found.

print("There are {number} points of interest surrounding {placename} latitude: {latitude}, longitude: {longitude}".format(number=len(pois), placename=place_name, latitude=latitude, longitude=longitude))And then we can examine their contents in more detail.

poisWe notice a few things:

Points of interest do not have a consistent OpenStreetMap

element_type, some arenode, others arerelationand we also haveway. You can find out more about elements in OpenStreetMap on this wiki page. This will become important when tidying up the data for next stage processing.Many of the values are missing. In SQL we would express a missing value as

NULL. But inpandasa missing value is expressed as not-a-number,NaN. This is quite a common standard, but it is not the only standard. Sometimes data is collected and coded with an “unreasonable” value for a missing value. For example, someone might set missing values for heights to -999. The concept is that this is an obviously void “height” and would trigger a human user to check whether it’s a missing value. Of course, this is obvious to humans, but not necessarily to a computer!

Nodes, ways and relations in OpenStreetMap all have different keys associated with them. The data is not structured in standard database columns. Different points of interest might have different keys present or absent. We might be interested in the following keys.

keys = ["name",

"addr:city",

"addr:postcode",

"amenity",

"building",

"building:name",

"building:colour",

"building:material",

"historic",

"memorial",

"religion",

"tourism",

"emergency",

"leisure",

"shop"]But our downloaded gdf may have fewer keys.

pois.columns.valuesWe can write a short piece of code to discover which keys are missing drom the data frame’s columns.

for key in keys:

if key not in pois.columns:

print(key)

present_keys = [key for key in keys if key in pois.columns]

pois[present_keys]This gives us the relevant points of interest (part of the map). If we’d like to see the entire street network, we can download the entire graph from the location.

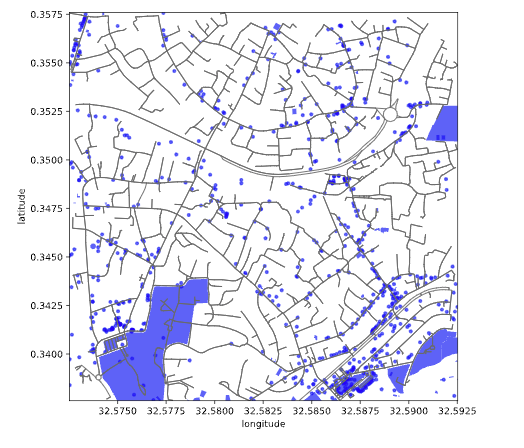

graph = ox.graph_from_bbox(north, south, east, west)

# Retrieve nodes and edges

nodes, edges = ox.graph_to_gdfs(graph)

# Get place boundary related to the place name as a geodataframe

area = ox.geocode_to_gdf(place_name)Which we can then render as follows.

We have the POI information on all tourist places structured in a geodataframe. To work with them in a machine learning algorithm, it will be easier to convert them to a pandas DataFrame.

import pandas as pdpois_df = pd.DataFrame(pois)

pois_df['latitude'] = pois_df.apply(lambda row: row.geometry.centroid.y, axis=1)

pois_df['longitude'] = pois_df.apply(lambda row: row.geometry.centroid.x, axis=1)

tourist_places_df = pois_df[pois_df.tourism.notnull()]

print(len(tourist_places_df))

tourist_places_dfpoi_counts = {}

poi_types =["amenity", "historic", "leisure", "shop", "tourism", "religion", "memorial"]

for tag in poi_types:

if tag in pois_df.columns:

poi_counts[tag] = pois_df[tag].notnull().sum()

else:

poi_counts[tag] = 0

poi_counts_df = pd.DataFrame(list(poi_counts.items()), columns=['POI Type', 'Count'])

poi_counts_dfAssessing the Available OpenStreetMap Features

In the course assessment you will be given the task of constructing a prediction system for various indicators at a given location. We expect that knowledge of the local region around the property should be helpful in making those predictions. To evaluate this we will now look at OpenStreetMap as a data source.

In this section, you should follow the methodology used in the above example to extract summary OSM information that can be useful in making predictions about an area. Use code from the example to construct a function that summarises the number of various points of interest in a target area. You should write reusable code that allows you to explore the characteristics of different points of interest.

def count_pois_near_coordinates(latitude: float, longitude: float, tags: dict, distance_km: float = 1.0) -> dict:

"""

Count Points of Interest (POIs) near a given pair of coordinates within a specified distance.

Args:

latitude (float): Latitude of the location.

longitude (float): Longitude of the location.

tags (dict): A dictionary of OSM tags to filter the POIs (e.g., {'amenity': True, 'tourism': True}).

distance_km (float): The distance around the location in kilometers. Default is 1 km.

Returns:

dict: A dictionary where keys are the OSM tags and values are the counts of POIs for each tag.

"""Now that you have written reusable code, choose the tags you want to

query. This should be different from the tags used in the example. You

can also search for specific tags like this:

"amenity": ["university", ....

# Modify this dict

tags = {

"amenity": ["university"],

"historic": True,

"leisure": True,

"shop": True,

"tourism": True,

"religion": True,

}Here there are 13 UK locations.

locations_dict = {

"Cambridge": (52.2054, 0.1132),

"Oxford": (51.7570, -1.2545),

"Euston Square": (51.5246, -0.1340),

"Temple": (51.5115, -0.1160),

"Kensington": (51.4988, -0.1749),

"Barnsley": (53.5526, -1.4797),

"Mansfield": (53.1472, -1.1987),

"Wakefield": (53.6848, -1.5039),

"Sunderland": (54.9069, -1.3838),

"Rotherham": (53.4300, -1.3568),

"Doncaster": (53.5228, -1.1288),

"Chesterfield": (53.2350, -1.4210),

"Huddersfield": (53.6450, -1.7794)

}Exercise 1

Use your code to query the OSM feature counts for each of them, and combine them into one dataframe.

Exercise 2

Use k-means clustering or another clustering method to try to find clusters of similar areas, based on nearby OSM features.

Exercise 3

Investigate the locations yourself, and assign them categories based on your interpretation. Visualise and compare your manual assignments against your clustering results.

Exercise 4

Normalise your dataframe and compute a distance matrix for the locations. Visualise it, and compare the outcode with your previous clustering results.

Exercise 5

Which features you included were correlated among each other? Investigate and plot a feature correlation matrix. What do these results say about your feature selection?

Joining Spatial Data

Matching OpenStreetMap and House Prices data

In this exercise you will download the geographies of houses from OpenStreetMap and map them to visualise the records you see in the house price dataset. This is a data linking and validation exercise.

The latitude and longitude of Cambridge are as follows:

place_name = "Cambridge"

latitude = 52.1951

longitude = 0.1313We want to execute an SQL query on your database to select all houses in a 1km x 1km region around the centre of Cambridge that have been part of housing transactions since 2020.

This operation can take a very long time. This is because the table

is not indexed on coordinate data, and therefore the query has to check

tens of millions of rows. This can be fixed by constructing an index on

the latitude and longitude values, using

BTREE to make a joint index. Note that indexing can

take a long time. Consider also indexing your table by other

variables you might find useful later.

Exercise 6

Index the table on the coordinate data using a BTREE and

index other columns you might find useful.

Exercise 7

Write an SQL query on your database to select all houses in a 1km x 1km region around the centre of Cambridge that have been part of housing transactions since 2020.

Exercise 8

Get information about all the buildings in that area from

OpenStreetMaps ('building': True). You will need their

address information (addr:housenumber,

addr:street, addr:postcode, …) and geometry

polygon (geometries_from_bbox). Construct a dataframe that

lists all OSM buildings in the area that have a full address, along with

their area (in square meters). Plot a map of the area, using color to

mark the buildings with addresses and the ones without.

Exercise 9

Match the houses you found in the price paid dataset with the buildings on OpenStreetMaps based on their addresses. Can this be applied to all building types? Are there any PP transactions which you couldn’t match to an OSM building, or any OSM buildings you coulnd’t match to a PP transaction? If so, what could be the reason for this? Do you employ any techniques to find non-exact matches? If yes, what matches would you have missed without it? Are you encountering false positive matches? Use this address matching to merge the two dataframes.

Exercise 10

Examine the relationship between the price and area of a property. - What other variables do you need to account for? - Is the correlation as strong as you would expect? - What factors could be impacting this?

Visualise the relationships you found.

Demonstrate the reusability of your code by executing the same analysis for Oxford.

place_name = "Oxford"

latitude = 51.7520

longitude = -1.2577Exercise 11

Replicating the same analysis for Oxford. You do not need to answer all the questions again, but you should show that your code works for this new input without the need to modify it. You should use the Fynesse library for this. Finish by plotting a map of the area and the correlation you find.

Conclusions

You should find some of the code you wrote above useful in your final assessment. Make sure you wrote the code to be reusable and efficient, and do include it in your Fynesse library. The functions you are particularly likely to reuse are the OSM feature search, and map visualisation functions.

Exercise 12

Add relevant code to your Fynesse library. Demonstrate this was successful by installing your library below and calling at least two example functions.

Thanks!

For more information on these subjects and more you might want to check the following resources.

- book: The Atomic Human

- twitter: @lawrennd

- podcast: The Talking Machines

- newspaper: Guardian Profile Page

- blog: http://inverseprobability.com