Setup

notutils

This small package is a helper package for various notebook utilities used below.

The software can be installed using

%pip install notutilsfrom the command prompt where you can access your python installation.

The code is also available on GitHub: https://github.com/lawrennd/notutils

Once notutils is installed, it can be imported in the

usual manner.

import notutilspods

In Sheffield we created a suite of software tools for ‘Open Data Science’. Open data science is an approach to sharing code, models and data that should make it easier for companies, health professionals and scientists to gain access to data science techniques.

You can also check this blog post on Open Data Science.

The software can be installed using

%pip install podsfrom the command prompt where you can access your python installation.

The code is also available on GitHub: https://github.com/lawrennd/ods

Once pods is installed, it can be imported in the usual

manner.

import podsmlai

The mlai software is a suite of helper functions for

teaching and demonstrating machine learning algorithms. It was first

used in the Machine Learning and Adaptive Intelligence course in

Sheffield in 2013.

The software can be installed using

%pip install mlaifrom the command prompt where you can access your python installation.

The code is also available on GitHub: https://github.com/lawrennd/mlai

Once mlai is installed, it can be imported in the usual

manner.

import mlai

from mlai import plot%pip install statsmodelsReview

We introduced machine learning as a way to extract knowledge from data to make predictions through a prediction function and an objective function. We looked at a simple example of predicting whether someone would buy a jumper based on their age and latitude, using logistic regression to model the log-odds of purchase. This highlighted how machine learning can codify predictions through mathematical functions. This is an example of a broader approach known as generalized linear models.

When taking a probabilistic approach to supervised learning we’re interested in predicting a class label, \(y_i\), given an input, \(\mathbf{ x}_i\). That’s represented probabilisticially as \(p(y_i|\mathbf{ x}_i)\). We can derive this conditional distribution through either (1) modelling the joint distribution, \(p(\mathbf{ y}, \mathbf{X})\) and then dividing by the marginal distribution of the inputs, \(p(\mathbf{X})\) , or (2) focusing specifically on modeling the conditional density, \(p(\mathbf{ y}|\mathbf{X})\), that directly answers our prediction question. In the generalised linear model we choose the second approach.

As we move to generalized linear models like logistic regression, we’ll see how directly modeling the conditional density \(p(\mathbf{ y}|\mathbf{X})\) can provide more flexibility in our modeling assumptions, while still allowing us to make the specific predictions we need.

Linear Regression Reminder

In linear regression, we model the relationship between a continuous response variable \(y_i\) and input variables \(\mathbf{ x}_i\) through a linear function with Gaussian noise:

\[y_i = f(\mathbf{ x}_i) + \epsilon_i\]

where \(f(\mathbf{ x}_i) = \mathbf{ w}^\top\mathbf{ x}_i = \sum_{j=1}^D w_jx_{i,j}\) and \(\epsilon_i \sim \mathcal{N}\left(0,\sigma^2\right)\)

This gives us a probabilistic model:

\[p(y_i|\mathbf{ x}_i) = \gaussianDist{\mathbf{ w}^\top\mathbf{ x}_i}{\sigma^2}\]

The key components are: - \(y_i\) is

the target/response variable we want to predict - \(\mathbf{ x}_i\) contains the input

features/explanatory variables

- \(\mathbf{ w}\) contains the

parameters/coefficients we learn - \(\epsilon_i\) represents random Gaussian

noise with variance \(\sigma^2\)

For the full dataset, we can write this in matrix form:

\[\mathbf{ y}= \mathbf{X}\mathbf{ w}+ \boldsymbol{ \epsilon}\]

where \(\mathbf{ y}= [y_1,\ldots,y_N]^\top\), \(\mathbf{X}\) contains the input vectors as rows, and \(\boldsymbol{ \epsilon}\sim \mathcal{N}\left(\mathbf{0},\sigma^2\mathbf{I}\right)\).

The expected value of our prediction is:

\[\mathbb{E}[y_i|\mathbf{ x}_i] = \mathbf{ w}^\top\mathbf{ x}_i\]

This linear model forms the foundation for generalized linear models like logistic regression, where we’ll adapt the model for classification by transforming the output through a non-linear function.

import statsmodels.api as sm

import pods# Demo of linear regression using python statsmodels.

data = pods.datasets.olympic_marathon_men()

x = data['X']

y = data['Y']

# Add constant term to design matrix

x = sm.add_constant(x)

model = sm.OLS(y, x)

results = model.fit()

results.summary()The statsmodels summary provides several key diagnostic measures that help us evaluate our model fit and identify potential areas for improvement. Since we’re working with one-dimensional data (year vs time), we can visualize everything easily to complement these statistical measures.

The model fit statistics show a moderately strong fit, with an R-squared of 0.744 indicating that our model explains 74.4% of the variance in the data. The adjusted R-squared of 0.733 confirms this isn’t just due to overfitting. The very low F-statistic p-value (7.40e-09) confirms the model’s overall significance. The AIC (10.08) and BIC (12.67) values will be useful when we compare this model against alternative specifications we might try.

Looking at the model parameters, we see a coefficient of -0.013 for our predictor, with a small standard error (0.002). The t-statistic of -8.515 and p-value of 0.000 indicate this effect is highly significant. The 95% confidence interval [-0.016, -0.010] gives us good confidence in our estimate. The negative coefficient confirms the expected downward trend in marathon times over the years.

However, the residual diagnostics suggest several potential issues we should investigate:

- The Durbin-Watson statistic (1.110) indicates positive

autocorrelation in the residuals, though not as severe as we might

expect. This suggests we might want to:

- Consider time series modeling approaches

- Add polynomial terms to capture non-linear trends

- Investigate if there are distinct “eras” in marathon times

- The highly significant Jarque-Bera test (p-value 7.67e-12) tells us

our residuals aren’t normally distributed. The skew (1.929) and kurtosis

(8.534) values show the distribution is strongly right-skewed with very

heavy tails. We might want to:

- Look for outliers or influential points

- Consider robust regression techniques

- Try transforming our response variable

- The large condition number (1.08e+05) suggests potential numerical

instability or multicollinearity issues. While less concerning with

single-predictor models, we should:

- Consider centering and scaling our predictor

- Watch for numerical precision issues

- Be cautious when extending to multiple predictors

The beauty of having one-dimensional data is that we can plot everything to visually confirm these statistical findings. A scatter plot with our fitted line will help us: - Visually assess the linearity assumption - Identify potential outliers - Spot any systematic patterns in the residuals - See if the relationship makes practical sense in terms of marathon performance over time

This visual inspection, combined with our statistical diagnostics, will guide our next steps in improving the model.

Looking at our plot and model diagnostics, we can now better understand the large condition number (1.08e+05) in our results. This high value likely stems from using raw year values (e.g., 1896, 1900, etc.) as our predictor variable. Such large numbers can lead to numerical instability in the computations.

To address this, we could consider: - Centering the years around their mean - Scaling the years to a smaller range (e.g., 0-1) - Using years since the first Olympics (e.g., 0, 4, 8, etc.)

Any of these transformations would help reduce the condition number while preserving the underlying relationship in our data. The coefficients would change, but the fitted values and overall model quality would remain the same.

Figure: Linear regression fit to Olympic marathon men’s times using

statsmodels.

The plot reveals several key features that help explain our diagnostic statistics:

- The 1904 St. Louis Olympics appears as a clear outlier, contributing to the non-normal residuals (Jarque-Bera p=0.00432) and right-skewed distribution (skew=1.385)

- We can observe distinct regimes in the data:

- Rapid improvement in times pre-WWI

- Disruption and variation during the war years

- More steady, consistent progress post-WWII

- These regime changes help explain the strong positive autocorrelation (Durbin-Watson=0.242) in our residuals

- While our high R-squared (0.972) captures the overall downward

trend, these features suggest we could improve the model by adding

additional features:

- Polynomial terms to capture non-linear trends

- Indicator variables for different time periods

- Interaction terms between features

- Variables accounting for external factors like temperature or course conditions

To incorporate multiple features into our model, we need a systematic way to organize this additional information. This brings us to the concept of the design matrix.

Design Matrix

The design matrix, often denoted as \(\designMatrix\), is a key component of a statistical model. It organizes our feature data in a structured way that facilitates model fitting and analysis. Each row of the design matrix represents a single observation or data point, while each column represents a different feature or predictor variable.

For \(n\) observations and \(p\) features, the design matrix takes the form:

\[\designMatrix = \begin{bmatrix} x_{11} & x_{12} & \cdots & x_{1p} \\ x_{21} & x_{22} & \cdots & x_{2p} \\ \vdots & \vdots & \ddots & \vdots \\ x_{n1} & x_{n2} & \cdots & x_{np} \end{bmatrix}\]

For example, if we’re predicting house prices, each row might represent a different house, with columns for features like:

- Square footage

- Number of bedrooms

- Year built

- Lot size

The design matrix provides a compact way to represent all our feature data and is used directly in model fitting. When we write our linear model as \(\mathbf{ y}= \designMatrix\mathbf{ w}+ \boldsymbol{ \epsilon}\), the design matrix \(\designMatrix\) is multiplied by our parameter vector \(\mathbf{ w}\) to generate predictions.

The design matrix often includes a column of 1s to account for the intercept term in our model. This allows us to write the model in matrix form without explicitly separating out the intercept term.

import statsmodels.api as sm

import pods

import numpy as np# Demo of additional features with interactions regression usying python statsmodels.

data = pods.datasets.olympic_marathon_men()

x = data['X']

y = data['Y']

# Scale the year to avoid numerical issues

x_scaled = (x - 1900) / 100 # Center around 1900 and scale to century units

# Add to design matrix indicator variable for pre-1914

x_aug = np.hstack([x_scaled, (x[:, 0] < 1914).astype(np.float64)[:, np.newaxis]])

# Add to design matrix indicator variable for 1914-1945

x_aug = np.hstack([x_aug, ((x[:, 0] >= 1914) & (x[:, 0] <= 1945)).astype(np.float64)[:, np.newaxis]])

# Add to design matrix indicator variable for post-1945

x_aug = np.hstack([x_aug, (x[:, 0] > 1945).astype(np.float64)[:, np.newaxis]])

# Add product terms that multiply the scaled year and the indicator variables.

x_aug = np.hstack([x_aug, x_scaled[:, 0:1] * x_aug[:, 1:2], x_scaled[:, 0:1] * x_aug[:, 2:3]])

# Add constant term to design matrix

x_aug = sm.add_constant(x_aug)

# Do the linear fit

model = sm.OLS(y, x_aug)

results = model.fit()

results.summary()

Figure: Polynomial regression fit to Olympic marathon men’s times

using statsmodels.

The augmented model with interactions shows a significant improvement in fit compared to the simpler linear model, with an R-squared value of 0.870 (adjusted R-squared of 0.839). This indicates that about 87% of the variance in marathon times is explained by our model.

The model includes several key components: - A base time trend (x1 coefficient: -0.6737) - Indicator variables for different historical periods (pre-1914, 1914-1945, post-1945) - Interaction terms between the time trend and these periods

The coefficients reveal interesting patterns: - The pre-1914 period shows a significant positive effect (x2: 1.5506, p<0.001) - The wartime period 1914-1945 also shows a positive effect (x3: 0.7982, p<0.05) - The post-1945 period has a positive effect (x4: 0.6883, p<0.01) - The interaction terms (x5, x6) suggest different rates of improvement in different periods, though these are less statistically significant

However, there are some concerns: 1. The very high condition number (2.79e+16) suggests serious multicollinearity issues 2. The Jarque-Bera test (p<0.001) indicates non-normal residuals 3. There’s significant skewness (2.314) and kurtosis (10.325) in the residuals

Despite these statistical issues, the model captures the major trends in marathon times across different historical periods better than a simple linear regression would.

Logistic Regression

A logistic regression is an approach to classification which extends the linear basis function models we’ve already explored. Rather than modeling the output of the function directly the assumption is that we model the log-odds with the basis functions.

The odds are defined as the ratio of the probability of a positive outcome, to the probability of a negative outcome. Just as we saw in our jumper (sweater) example where:

\[ \log \frac{p(\text{bought})}{p(\text{not bought})} = w_0 + w_1 \text{age} + w_2 \text{latitude} \]

If the probability of a positive outcome is denoted by \(\pi\), then the odds are computed as \(\frac{\pi}{1-\pi}\). Odds are widely used by bookmakers in gambling, although a bookmakers odds won’t normalise: i.e. if you look at the equivalent probabilities, and sum over the probability of all outcomes the bookmakers are considering, then you won’t get one. This is how the bookmaker makes a profit. Because a probability is always between zero and one, the odds are always between \(0\) and \(\infty\). If the positive outcome is unlikely the odds are close to zero, if it is very likely then the odds become close to infinite. Taking the logarithm of the odds maps the odds from the positive half space to being across the entire real line. Odds that were between 0 and 1 (where the negative outcome was more likely) are mapped to the range between \(-\infty\) and \(0\). Odds that are greater than 1 are mapped to the range between \(0\) and \(\infty\). Considering the log odds therefore takes a number between 0 and 1 (the probability of positive outcome) and maps it to the entire real line. The function that does this is known as the logit function, \(g^{-1}(p_i) = \log\frac{p_i}{1-p_i}\). This function is known as a link function.

For a standard regression we take, \[ f(\mathbf{ x}) = \mathbf{ w}^\top \boldsymbol{ \phi}(\mathbf{ x}), \] if we want to perform classification we perform a logistic regression. \[ \log \frac{\pi}{(1-\pi)} = \mathbf{ w}^\top \boldsymbol{ \phi}(\mathbf{ x}) \] where the odds ratio between the positive class and the negative class is given by \[ \frac{\pi}{(1-\pi)} \] The odds can never be negative, but can take any value from 0 to \(\infty\). We have defined the link function as taking the form \(g^{-1}(\cdot)\) implying that the inverse link function is given by \(g(\cdot)\). Since we have defined, \[ g^{-1}(\pi) = \mathbf{ w}^\top \boldsymbol{ \phi}(\mathbf{ x}) \] we can write \(\pi\) in terms of the inverse link function, \(g(\cdot)\) as \[ \pi = g(\mathbf{ w}^\top \boldsymbol{ \phi}(\mathbf{ x})). \]

Basis Function

We’ll define our prediction, objective and gradient functions below. But before we start, we need to define a basis function for our model. Let’s start with the linear basis.

import numpy as npimport mlai

from mlai import linearPrediction Function

Now we have the basis function let’s define the prediction function.

import numpy as npdef predict(w, x, basis=linear, **kwargs):

"Generates the prediction function and the basis matrix."

Phi = basis(x, **kwargs)

f = np.dot(Phi, w)

return 1./(1+np.exp(-f)), PhiThis inverse of the link function is known as the logistic (thus the name logistic regression) or sometimes it is called the sigmoid function. For a particular value of the input to the link function, \(f_i = \mathbf{ w}^\top \boldsymbol{ \phi}(\mathbf{ x}_i)\) we can plot the value of the inverse link function as below.

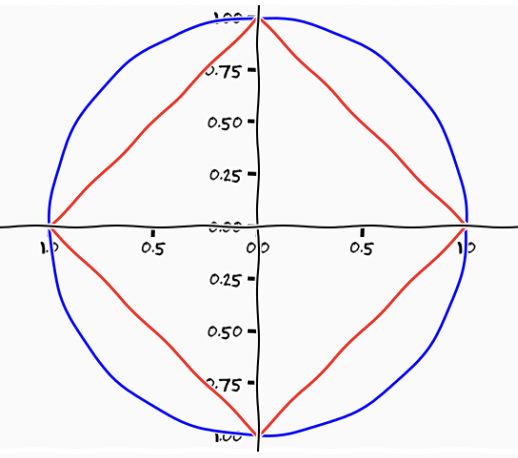

Sigmoid Function

Figure: The logistic function.

The function has this characeristic ‘s’-shape (from where the term sigmoid, as in sigma, comes from). It also takes the input from the entire real line and ‘squashes’ it into an output that is between zero and one. For this reason it is sometimes also called a ‘squashing function’.

By replacing the inverse link with the sigmoid we can write \(\pi\) as a function of the input and the parameter vector as, \[ \pi(\mathbf{ x},\mathbf{ w}) = \frac{1}{1+\exp\left(-\mathbf{ w}^\top \boldsymbol{ \phi}(\mathbf{ x})\right)}. \] The process for logistic regression is as follows. Compute the output of a standard linear basis function composition (\(\mathbf{ w}^\top \boldsymbol{ \phi}(\mathbf{ x})\), as we did for linear regression) and then apply the inverse link function, \(g(\mathbf{ w}^\top \boldsymbol{ \phi}(\mathbf{ x}))\). In logistic regression this involves squashing it with the logistic (or sigmoid) function. Use this value, which now has an interpretation as a probability in a Bernoulli distribution to form the likelihood. Then we can assume conditional independence of each data point given the parameters and develop a likelihod for the entire data set.

As we discussed last time, the Bernoulli likelihood is of the form, \[ P(y_i|\mathbf{ w}, \mathbf{ x}) = \pi_i^{y_i} (1-\pi_i)^{1-y_i} \] which we can think of as clever trick for mathematically switching between two probabilities if we were to write it as code it would be better described as

def bernoulli(x, y, pi):

if y == 1:

return pi(x)

else:

return 1-pi(x)but writing it mathematically makes it easier to write our objective function within a single mathematical equation.

Maximum Likelihood

To obtain the parameters of the model, we need to maximize the likelihood, or minimize the objective function, normally taken to be the negative log likelihood. With a data conditional independence assumption the likelihood has the form, \[ P(\mathbf{ y}|\mathbf{ w}, \mathbf{X}) = \prod_{i=1}^nP(y_i|\mathbf{ w}, \mathbf{ x}_i). \] which can be written as a log likelihood in the form \[ \log P(\mathbf{ y}|\mathbf{ w}, \mathbf{X}) = \sum_{i=1}^n\log P(y_i|\mathbf{ w}, \mathbf{ x}_i) = \sum_{i=1}^n y_i \log \pi_i + \sum_{i=1}^n(1-y_i)\log (1-\pi_i) \] and if we take the probability of positive outcome for the \(i\)th data point to be given by \[ \pi_i = g\left(\mathbf{ w}^\top \boldsymbol{ \phi}(\mathbf{ x}_i)\right), \] where \(g(\cdot)\) is the inverse link function, then this leads to an objective function of the form, \[ E(\mathbf{ w}) = - \sum_{i=1}^ny_i \log g\left(\mathbf{ w}^\top \boldsymbol{ \phi}(\mathbf{ x}_i)\right) - \sum_{i=1}^n(1-y_i)\log \left(1-g\left(\mathbf{ w}^\top \boldsymbol{ \phi}(\mathbf{ x}_i)\right)\right). \]

import numpy as npdef objective(g, y):

"Computes the objective function."

labs = np.asarray(y, dtype=float).flatten()

posind = np.where(labs==1)

negind = np.where(labs==0)

return -np.log(g[posind, :]).sum() - np.log(1-g[negind, :]).sum()As normal, we would like to minimize this objective. This can be done by differentiating with respect to the parameters of our prediction function, \(\pi(\mathbf{ x};\mathbf{ w})\), for optimisation. The gradient of the likelihood with respect to \(\pi(\mathbf{ x};\mathbf{ w})\) is of the form, \[ \frac{\text{d}E(\mathbf{ w})}{\text{d}\mathbf{ w}} = -\sum_{i=1}^n \frac{y_i}{g\left(\mathbf{ w}^\top \boldsymbol{ \phi}(\mathbf{ x})\right)}\frac{\text{d}g(f_i)}{\text{d}f_i} \boldsymbol{ \phi}(\mathbf{ x}_i) + \sum_{i=1}^n \frac{1-y_i}{1-g\left(\mathbf{ w}^\top \boldsymbol{ \phi}(\mathbf{ x})\right)}\frac{\text{d}g(f_i)}{\text{d}f_i} \boldsymbol{ \phi}(\mathbf{ x}_i) \] where we used the chain rule to develop the derivative in terms of \(\frac{\text{d}g(f_i)}{\text{d}f_i}\), which is the gradient of the inverse link function (in our case the gradient of the sigmoid function).

So the objective function now depends on the gradient of the inverse link function, as well as the likelihood depends on the gradient of the inverse link function, as well as the gradient of the log likelihood, and naturally the gradient of the argument of the inverse link function with respect to the parameters, which is simply \(\boldsymbol{ \phi}(\mathbf{ x}_i)\).

The only missing term is the gradient of the inverse link function. For the sigmoid squashing function we have, \[\begin{align*} g(f_i) &= \frac{1}{1+\exp(-f_i)}\\ &=(1+\exp(-f_i))^{-1} \end{align*}\] and the gradient can be computed as \[\begin{align*} \frac{\text{d}g(f_i)}{\text{d} f_i} & = \exp(-f_i)(1+\exp(-f_i))^{-2}\\ & = \frac{1}{1+\exp(-f_i)} \frac{\exp(-f_i)}{1+\exp(-f_i)} \\ & = g(f_i) (1-g(f_i)) \end{align*}\] so the full gradient can be written down as \[ \frac{\text{d}E(\mathbf{ w})}{\text{d}\mathbf{ w}} = -\sum_{i=1}^n y_i\left(1-g\left(\mathbf{ w}^\top \boldsymbol{ \phi}(\mathbf{ x})\right)\right) \boldsymbol{ \phi}(\mathbf{ x}_i) + \sum_{i=1}^n (1-y_i)\left(g\left(\mathbf{ w}^\top \boldsymbol{ \phi}(\mathbf{ x})\right)\right) \boldsymbol{ \phi}(\mathbf{ x}_i). \]

import numpy as npdef gradient(g, Phi, y):

"Generates the gradient of the parameter vector."

labs = np.asarray(y, dtype=float).flatten()

posind = np.where(labs==1)

dw = -(Phi[posind]*(1-g[posind])).sum(0)

negind = np.where(labs==0 )

dw += (Phi[negind]*g[negind]).sum(0)

return dw[:, None]Optimization of the Function

Reorganizing the gradient to find a stationary point of the function with respect to the parameters \(\mathbf{ w}\) turns out to be impossible. Optimization has to proceed by numerical methods. Options include the multidimensional variant of Newton’s method or gradient based optimization methods like we used for optimizing matrix factorization for the movie recommender system. We recall from matrix factorization that, for large data, stochastic gradient descent or the Robbins Munro (Robbins and Monro, 1951) optimization procedure worked best for function minimization.

Logistic regression is a widely used technique in many real world application domains. Within social media, the first wave of machine learning solutions were targeted at challenges such as ad matching or news-post ranking. In these domains the users are characterised by their previous behaviour (such as which posts they have liked) and other contextual information (such as which friends they have). The logistic regression approach is then used to predict which ads should be shown. For example Facebook’s ad matching system faces the complex challenge of connecting millions of advertisers with billions of users. The scale of this matching problem is immense:

- Facebook has millions of potential advertisers, each with different target audiences and budgets

- There are billions of users, each with unique interests and behaviors

- Decisions about which ads to show must be made in real-time

- The system needs to balance between user experience and advertiser ROI

Facebook’s solution employed a system that combines logistic regression with decision trees. The system considers various features including:

- User demographics and behaviour

- Historical ad performance

- Advertiser parameters and budgets

- Contextual information

The hybrid approach allows Facebook to make rapid predictions about ad performance while handling the massive scale of their platform. The details of this system are described in a technical paper by Herbrich et al., which describes Facebook’s click prediction system for online advertising, which combines decision trees with logistic regression to predict ad clicks. At the time Facebook had over 750 million daily active users and 1 million advertisers at the time. The authors found that a hybrid model outperformed either decision trees or logistic regression alone.

Importantly, from our perspective, the researchers found that feature selection was the most critical factor - particularly choosing the features that capture historical information about users and ads. Given the ‘right’ features and model architecture, adapting the system to accommodate other aspects such as data freshness and learning rate tuning provided smaller incremental gains. To handle the scale of the task the system used a cascade of classifies to handle thecandidate ads per request efficiently. The hybrid model served as the final stage classifier. The paper also discusses practical considerations around implementing online learning and managing computational resources at scale.

Olivetti Glasses Data

Let’s classify images with logistic regression. We’ll look at a data

set of individuals with glasses. We can load in the data from

pods as

Correspond to whether the subject of the image is wearing glasses. Set up the ipython environment and download the data:

from scipy import ioFirst let’s retrieve some data. We will use the ORL faces data set, our objective will be to classify whether or not the subject in an image is wearing glasess.

Here’s a simple way to visualise the data. Each pixel in the image will become an input to the GP.

import podsdata = pods.datasets.olivetti_glasses()

X = data['X']

y = data['Y']

Xtest = data['Xtest']

ytest = data['Ytest']

print(data['info'], data['details'], data['citation'])

Figure: Image from the Oivetti glasses data sets.

Batch Gradient Descent

We will need to define some initial random values for our vector and then minimize the objective by descending the gradient.

# Separate train and test

indices = np.random.permutation(X.shape[0])

num_train = np.ceil(X.shape[0]/2)r

train_indices = indices[:num_train]

test_indices = indices[num_train:]

X_train = X.iloc[train_indices]

y_train = y.iloc[train_indices]==True

X_test = X.iloc[test_indices]

y_test = y.iloc[test_indices]==Trueimport numpy as np# gradient descent algorithm

w = np.random.normal(size=(X.shape[1]+1, 1), scale = 0.001)

eta = 1e-9

iters = 10000

for i in range(iters):

g, Phi = predict(w, X_train, linear)

w -= eta*gradient(g, Phi, y_train) + 0.001*w

if not i % 100:

print("Iter", i, "Objective", objective(g, y_train))Let’s look at the weights and how they relate to the inputs.

import matplotlib.pyplot as pltprint(w)What does the magnitude of the weight vectors tell you about the different parameters and their influence on outcome? Are the weights of roughly the same size, if not, how might you fix this?

g_test, Phi_test = predict(w, X_test, linear)

np.sum(g_test[y_test]>0.5)Stochastic Gradient Descent

Exercise 1

Now construct a stochastic gradient descent algorithm and run it on the data. Is it faster or slower than batch gradient descent? What can you do to improve convergence speed?

Using Statsmodels

The statsmodels package provides a more convenient way to fit logistic regression models with additional statistical analysis capabilities.

import statsmodels.api as sm# Add constant term to X_train and X_test for intercept

X_train_sm = sm.add_constant(X_train)

X_test_sm = sm.add_constant(X_test)

# Fit logistic regression

model = sm.Logit(y_train, X_train_sm)

results = model.fit()

# Print summary of model results

print(results.summary())

# Make predictions on test set

y_pred = results.predict(X_test_sm)

print("\nTest set accuracy:", np.mean((y_pred > 0.5) == y_test))The statsmodels implementation provides additional statistical metrics like p-values, confidence intervals, and various diagnostic tests that can help evaluate the model’s fit and assumptions.

Going Further: Optimization

Other optimization techniques for generalized linear models include Newton’s method, it requires you to compute the Hessian, or second derivative of the objective function.

Methods that are based on gradients only include L-BFGS and conjugate gradients. Can you find these in python? Are they suitable for very large data sets? }

Other GLMs

We’ve introduced the formalism for generalized linear models. Have a

think about how you might model count data using the Poisson

distribution and a log link function for the rate, \(\lambda(\mathbf{ x})\). If you want a data

set you can try the pods.datasets.google_trends() for some

count data.

Poisson Distribution

Figure: The Poisson distribution.

Poisson Regression

Poisson regression is a type of generalized linear model (GLM) used when modeling count data. It assumes the response variable follows a Poisson distribution and uses a logarithmic link function to relate the mean of the response to the linear predictor.

In this model, we make the rate parameter λ a function of covariates (like space or time). The logarithm of the rate is modeled as a linear combination of the input features:

\[\log \lambda(\mathbf{ x}, t) = \mathbf{ w}_x^\top \boldsymbol{ \phi}_x(\mathbf{ x}) + \mathbf{ w}_t^\top \boldsymbol{ \phi}_t(t)\]

where: - \(\mathbf{ w}_x\) and \(\mathbf{ w}_t\) are parameter vectors - \(\boldsymbol{ \phi}_x(\mathbf{ x})\) and \(\boldsymbol{ \phi}_t(t)\) are basis functions for space and time respectively

This formulation is known as a log-linear or log-additive model because we’re adding terms in the log space. The logarithm serves as our link function, connecting the linear predictor to the response variable’s mean.

An important characteristic of this model that practitioners should be aware of is that while we add terms in the log space, the model becomes multiplicative when we transform back to the original space. This happens because:

We start with the log-additive form: \(\log \lambda(\mathbf{ x}, t) = f_x(\mathbf{ x}) + f_t(t)\)

When we exponentiate both sides to get back to λ, the addition in log space becomes multiplication: \[\lambda(\mathbf{ x}, t) = \exp(f_x(\mathbf{ x}) + f_t(t)) = \exp(f_x(\mathbf{ x}))\exp(f_t(t))\]

This multiplicative nature has important implications for interpretation. For example, if we increase one input variable, it has a multiplicative effect on the rate, not an additive one. This can lead to rapid growth in the predicted counts as input values increase.

import pods

import statsmodels.api as smdata = pods.datasets.google_trends(['xbox one', 'wii u', 'ps4'])

x = data['X']

y = data['Y']

model = sm.GLM(y, sm.add_constant(x), family=sm.families.Poisson())

result = model.fit()The statsmodels library in Python provides a convenient

way to fit Poisson regression models. The sm.GLM function

is used to fit generalized linear models, and we specify the Poisson

family to indicate that we’re modeling count data. The

sm.add_constant(x) function adds a column of ones to the

design matrix to account for the intercept term.

Figure: Google Trends Search Queries for Xbox One, Wii U, and PS4.

Figure: Google Trends Search Queries for Xbox One, Wii U, and PS4.

Let’s look at another example using synthetic data to demonstrate Poisson regression without relying on external APIs.

import numpy as np

import statsmodels.api as sm# Generate some example count data

np.random.seed(42)

n_samples = 100

x1 = np.random.uniform(0, 10, n_samples)

x2 = np.random.uniform(0, 5, n_samples)

X = np.column_stack((x1, x2))

# True relationship: y ~ Poisson(exp(1 + 0.3*x1 - 0.2*x2))

lambda_true = np.exp(1 + 0.3*x1 - 0.2*x2)

y = np.random.poisson(lambda_true)

# Fit Poisson regression

model_synthetic = sm.GLM(y, sm.add_constant(X), family=sm.families.Poisson())

result_synthetic = model_synthetic.fit()In this synthetic example, we generate count data that follows a Poisson distribution where the rate parameter λ depends on two predictor variables. This demonstrates how Poisson regression can model count data with multiple predictors.

Figure: Diagnostic plots for the Poisson regression model showing actual vs predicted counts and residual analysis.

Practical Tips

When working with generalised linear models in practice, there are several key considerations that can significantly impact model performance:

Feature engineering is often the most critical factor in model success:

- Build modular data processing pipelines that allow you to easily test different feature sets. For example, if modeling house prices, you might want to test combinations of raw features (square footage, bedrooms), derived features (price per square foot), and interaction terms (bedrooms × bathrooms).

- Consider non-linear transformations of continuous variables. For instance, taking the log of price data often helps normalize distributions.

- Be thoughtful about encoding categorical variables - one-hot encoding isn’t always optimal. For high-cardinality categories, consider target encoding or feature hashing.

- Scale features appropriately - standardization or min-max scaling depending on your model assumptions.

- Document your feature creation process thoroughly, including the rationale for each transformation.

Model validation requires careful consideration:

- Cross-validation should match your real-world use case. For time series data, use time-based splits rather than random splits.

- Bootstrap sampling helps understand parameter uncertainty. For example, bootstrapping can show if a coefficient’s sign might flip under different samples.

- Hold-out test sets should be truly independent. In a customer churn model, this might mean testing on future customers rather than a random subset.

- Watch for data leakage, especially with time-dependent features. If predicting customer churn, using future purchase data would create leakage.

Diagnostic checks are essential for model reliability:

- Create residual plots against fitted values and each predictor. Look for systematic patterns - a U-shaped residual plot suggests missing quadratic terms.

- For logistic regression, plot predicted probabilities against actual outcomes in bins to check calibration.

- Calculate influence measures like Cook’s distance to identify outliers. In a house price model, a mansion might have outsized influence on coefficients.

- Check Variance Inflation Factors (VIF) for multicollinearity. High VIF (>5-10) suggests problematic correlation between predictors.

Visualization remains crucial throughout:

- Before modeling, create scatter plots, box plots, and histograms to understand your data distribution and relationships.

- Use pairs plots to identify correlations and potential interactions between features.

- Create residual diagnostic plots including Q-Q plots for normality checking.

- When communicating results, focus on interpretable visualizations. For instance, partial dependence plots can show how predictions change with a single feature.

Additional practical considerations:

- Start simple and add complexity incrementally. A basic linear model often provides a good baseline.

- Keep track of model performance metrics across iterations to ensure changes actually improve results.

- Consider the computational cost of feature engineering - some transformations might not be feasible in production.

- Think about how features will be available in production. If a feature requires complex processing or external data, it might not be practical.

- For categorical variables with many levels, consider grouping rare categories.

- When dealing with missing data, document your imputation strategy and test its impact on model performance.

Further Reading

- Section 5.2.2 up to pg 182 of Rogers and Girolami (2011)

Thanks!

For more information on these subjects and more you might want to check the following resources.

- book: The Atomic Human

- twitter: @lawrennd

- podcast: The Talking Machines

- newspaper: Guardian Profile Page

- blog: http://inverseprobability.com